A multi-disciplinary approach to complexity

There is a podcast accompanying this article.

The Internet is huge. With mobile phones and other devices now being hooked up as well as computers, it will soon comprise many billions of endpoints. In sheer size and complexity, the Internet is not far off the human brain, with its hundred billion neurons linked by around ten thousand trillion individual connections. If you're finding it hard even to read these huge numbers off the page, how can anyone be expected to cope with complexity on this vast scale?

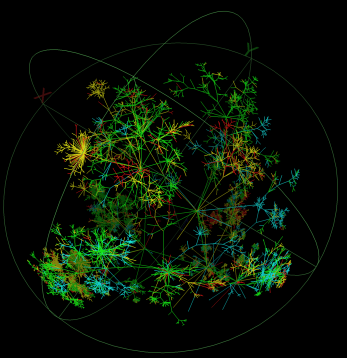

A partial view of the Internet, as displayed in an exhibition at the Museum of Modern Art in New York. Image created at CAIDA, © The Regents of the University of California, all rights reserved.

Before the age of computers, scientists dealt with complexity by ignoring as much of it as possible, looking for underlying simplicity instead. But advanced technology, apart from creating its own exceedingly complex beasts, now provides the computing power to tackle complexity head-on. This is not only because it enables us to analyse very large amounts of data, but also because it allows us to simulate complex interactions between a comparatively small number of different agents, such as those that determine our climate. Mathematical tools for tackling complexity in its various guises have developed rapidly in recent years, but with complex systems coming up in so many different areas, not everyone is benefiting from the new understanding as much as they could. What is still missing is an overarching science of complexity: a mathematical toolbox serving everyone who deals with complexity, in whatever shape or form.

This month a group of experts from a range of different areas got together in Cambridge to try to remedy the situation. It was the first meeting of the Cambridge Complex Systems Consortium, an initiative of Clare Hall College at the University of Cambridge, formed by its former president Ekhard Salje, with sponsorship from Lockheed Martin UK. "This multi-disciplinary team of Cambridge-based academic and industry leaders aims to study complexity across multi-dimensional domains," says Michelle Tuveson of Lockheed Martin UK, who is an associate of Clare Hall College and organised the meeting. "We aim to develop a science around complex systems by determining good frameworks, optimisation strategies, and prioritisation schemes."

The effort has been met with enthusiasm by those who've devoted their working lives to grappling with complex systems. "I have no doubt that in the coming decades we will find a core of ideas concerned with complex networks, which is straightforward, simple to explain and has many, many applications," says Frank Kelly, Professor of the Mathematics of Systems at the University of Cambridge's Centre for Mathematical Sciences, who took part in the meeting. Kelly works on information and traffic networks of all sorts, including the Internet, which is threatening to become too slow to cope with the huge volumes of data now being transferred between computers. The Transmission Control Protocol (TCP), the few lines of code that orchestrate the transmission of files, is in need of updating. But the Internet's vast size means that you can't build an exact model of the network to see how it works and to test new ideas.

Interestingly, from a mathematical viewpoint, size isn't necessarily a problem: "The Internet's size actually helps techniques that are based on looking at asymptotic behaviour," says Kelly. "When things are large enough, regularities and statistical patterns can emerge that are useful for understanding behaviour." As an example, think of emails arriving at a server. People's reasons for sending emails are many and varied, and one email can prompt several others. There is no global, deterministic model of this complex and interconnected system. But if you take a snapshot in time, the pattern of email arrivals will look pretty random. Mathematicians describe random processes using probability distributions, and the more events you're dealing with, the stronger the predictive power of these distributions. This is a bit like flipping a coin: you can't predict the outcome of a single flip, but the law of large numbers means that you have a good idea of the proportion of heads and tails when you flip it many times. "If you look at short time scales," says Kelly, "then the things that happen in those time scales have lots of different causes and that produces simplicity."
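For readers who want to see the law of large numbers at work, here is a minimal Python sketch. It illustrates the general idea only and is not a model taken from Kelly's research. It simulates a fair coin with Python's standard `random` module, using a fixed seed so that runs are repeatable, and reports how far the fraction of heads strays from one half as the number of flips grows. The function name `heads_fraction` is made up for this example.

```python
import random


def heads_fraction(num_flips, seed=0):
    """Flip a simulated fair coin num_flips times and return the fraction of heads."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    heads = sum(1 for _ in range(num_flips) if rng.random() < 0.5)
    return heads / num_flips


for n in (10, 100, 10_000, 1_000_000):
    fraction = heads_fraction(n)
    print(f"{n:>9,} flips: {fraction:.4f} heads, {abs(fraction - 0.5):.4f} away from 1/2")
```

The typical deviation from one half shrinks roughly in proportion to one over the square root of the number of flips, so a million flips land far closer to one half than ten flips do. The email example follows the same logic: no single message is predictable, but the aggregate traffic becomes statistically regular.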

Neurons communicate through electrical impulses. Their interconnection is the basis of brain function. Image courtesy the National Institute on Aging.

As a network, the Internet is a product of evolution as much as design and, unsurprisingly, shares several features with networks found in nature. The human brain is one of these, as Ed Bullmore, Professor of Psychiatry at the University of Cambridge, explains: "Most of the functions of the brain emerge from the coordinated activity of more than one neuron, so to understand how the brain works, you have to understand the simultaneous activity of networks of neurons." Scientists are not yet able to resolve the brain down to cellular scales, but using brain imaging techniques they can look for connections between different areas of the brain. Bullmore and his colleagues looked at the brain activity of volunteers who were relaxing (yes, your brain is still busy even when you're resting), and the transient networks they saw had a feature now well known to network theorists: they were small world networks.

"Small world networks have a topological organisation somewhere between a random network [with randomly connected nodes] and a regular network [where each node has the same number of connections]," says Bullmore. "Like a regular network, a small world network has a high degree of clustering, that is high connectivity between nearest neighbours, but unlike a regular network, it has a small average path length between pairs of nodes." In other words: there are tightly-knit local neighbourhoods, but also reasonably quick connections between areas that are further apart. For neuroscientists, this was an interesting discovery: "Before this discovery was made, there had been a tension about how to explain how the brain works," says Bullmore. "We know that some brain functions, for example aspects of visual perception, are highly specialised and localised, but other functions, like working memory or attention, appear to be more distributed across the brain. The small world topology solves the tension: it gives you both the capacity for segregated localised processing and highly efficient distributive processing."

Discovering features of this kind is beneficial not just for understanding brain function, but also for understanding brain malfunction: studies of patients with schizophrenia suggest that the underlying problem may well lie in the configuration of their brain networks.
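The contrast Bullmore describes, high clustering combined with short paths, can be reproduced with a standard textbook construction, the Watts-Strogatz model. The self-contained Python sketch below assumes that model as the network generator; it is a common illustration, not necessarily what Bullmore's group used on brain data. It starts from a regular ring in which every node is linked to its k nearest neighbours, then rewires each link to a random node with probability p. The function names and the values n = 500 and k = 10 are arbitrary choices made for this example.

```python
import random
from collections import deque


def ring_lattice(n, k):
    """Regular network: each of n nodes is linked to its k nearest neighbours (k even)."""
    graph = {v: set() for v in range(n)}
    for v in range(n):
        for offset in range(1, k // 2 + 1):
            u = (v + offset) % n
            graph[v].add(u)
            graph[u].add(v)
    return graph


def watts_strogatz(n, k, p, seed=0):
    """Start from a ring lattice, then rewire each link to a random node with probability p."""
    rng = random.Random(seed)
    graph = ring_lattice(n, k)
    for offset in range(1, k // 2 + 1):
        for v in range(n):
            u = (v + offset) % n
            if u in graph[v] and rng.random() < p:
                # Keep endpoint v; move the other end to a node v is not yet linked to.
                choices = [w for w in range(n) if w != v and w not in graph[v]]
                if choices:
                    w = rng.choice(choices)
                    graph[v].remove(u)
                    graph[u].remove(v)
                    graph[v].add(w)
                    graph[w].add(v)
    return graph


def average_clustering(graph):
    """Mean fraction of each node's neighbour pairs that are themselves linked."""
    total = 0.0
    for v, nbrs in graph.items():
        d = len(nbrs)
        if d < 2:
            continue  # clustering counts as 0 for nodes with fewer than two neighbours
        links = sum(1 for a in nbrs for b in nbrs if a < b and b in graph[a])
        total += 2.0 * links / (d * (d - 1))
    return total / len(graph)


def average_path_length(graph):
    """Mean shortest-path length (in hops) over all pairs of mutually reachable nodes."""
    total, pairs = 0, 0
    for source in graph:
        dist = {source: 0}
        queue = deque([source])
        while queue:  # breadth-first search from source
            v = queue.popleft()
            for u in graph[v]:
                if u not in dist:
                    dist[u] = dist[v] + 1
                    queue.append(u)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs if pairs else float("inf")


for p in (0.0, 0.05, 1.0):
    g = watts_strogatz(n=500, k=10, p=p, seed=1)
    print(f"p={p:<4}: clustering={average_clustering(g):.3f}, "
          f"path length={average_path_length(g):.2f}")
```

With p = 0 the network is regular: clustering is high (exactly 2/3 for k = 10) but paths between distant nodes are long. A few random shortcuts (p = 0.05) leave the clustering largely intact while cutting the average path length sharply, which is the small world regime. At p = 1 the network is essentially random, and the clustering collapses as well.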

The weather: hard to predict, but potentially deadly. This image shows Hurricane Katrina seen from space. Image courtesy NASA.

But complexity is not just about large networks. Climate scientists, represented at the Cambridge consortium by Hans Graf, Professor of Environmental Systems Analysis in Cambridge's geography department, deal with systems made up of relatively few components. In simple terms, the Earth's climate is determined by three factors: the Sun's energy, the way the Earth absorbs and reflects this energy, and conversions between different forms of energy within the climate system.

All of these are well understood: they are governed by the classical laws of radiation, thermodynamics and hydrodynamics and can be modelled by differential equations. It's the interaction between these factors that causes problems. One difficulty is that there is a gap between the macro models and the micro models: "In climate models we can only resolve the atmosphere down to a few kilometres," says Graf, "but processes on the microscale are also important." These microscale processes include, for example, the evaporation and condensation of water vapour: when water vapour condenses, forming clouds and eventually precipitation, it releases latent heat into the atmosphere, and this is a major driver of atmospheric circulation. The current challenge is to find ways of combining what we know about the micro level with global models.
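To see how the first two of the three factors, the Sun's energy and the way the Earth absorbs and reflects it, fit together, here is the simplest textbook "zero-dimensional" energy balance, sketched in Python. It is a toy, not a climate model: it treats the Earth as a single uniform body, ignores the third factor (and with it the greenhouse effect) entirely, and assumes round values for the solar constant (about 1361 W/m²) and for the Earth's reflectivity, or albedo (0.3). Absorbed sunlight, averaged over the whole globe, is balanced against the radiation emitted by a body at temperature T according to the Stefan-Boltzmann law.

```python
SOLAR_CONSTANT = 1361.0            # W/m^2 at the top of the atmosphere (assumed round value)
STEFAN_BOLTZMANN = 5.670374419e-8  # W/(m^2 K^4)


def effective_temperature(solar_constant=SOLAR_CONSTANT, albedo=0.3):
    """Temperature (K) at which a uniform Earth radiates away exactly the sunlight it absorbs.

    Balance: solar_constant * (1 - albedo) / 4 = sigma * T**4.
    The factor 4 is the ratio of the Earth's surface area (4 pi r^2) to the
    disc it presents to the Sun (pi r^2).
    """
    absorbed = solar_constant * (1.0 - albedo) / 4.0  # W/m^2, averaged over the globe
    return (absorbed / STEFAN_BOLTZMANN) ** 0.25


t = effective_temperature()
print(f"effective temperature: {t:.1f} K ({t - 273.15:.1f} °C)")
```

This gives about 255 K, some 33 degrees colder than the observed global average of around 288 K (15 °C). The gap comes from the processes the toy model leaves out, which is why real climate models need the full machinery of radiation, thermodynamics and hydrodynamics.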

Climate scientists are also faced with a far more fundamental challenge: they are dealing with fully fledged, unavoidable chaos. Climate systems are extremely sensitive to initial conditions, and this is reflected in the mathematical models: when you plug today's weather values into a model, the smallest discrepancy in those values can lead to hugely different outcomes. It's the butterfly effect in action. This means that you can't forecast the weather far into the future. "Anybody who says that they know something precise about the weather in a month's time is a charlatan," says Graf. "Weather forecasts are useful between 24 hours and five days. The problem is to have the initial conditions right. Our observational systems are not dense enough to get the initial data right, and in a chaotic system this makes a huge difference."
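The butterfly effect can be seen in the Lorenz equations, a drastically simplified model of atmospheric convection that the meteorologist Edward Lorenz studied in the early 1960s. The Python sketch below integrates them with a fourth-order Runge-Kutta scheme, using Lorenz's classic parameter values (σ = 10, ρ = 28, β = 8/3). The starting point (1, 1, 1), the step size and the size of the perturbation are arbitrary choices made for illustration. The sketch runs the model twice from starting points that differ by one part in a hundred million and tracks how far apart the two runs drift.

```python
import math


def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz (1963) equations."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step of size dt."""
    def shifted(base, slope, h):
        return tuple(s + h * k for s, k in zip(base, slope))

    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, 0.5 * dt))
    k3 = lorenz(shifted(state, k2, 0.5 * dt))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(
        s + dt / 6.0 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def separation_history(perturbation=1e-8, dt=0.01, steps=5000):
    """Distance between two runs whose starting points differ by `perturbation` in x."""
    a = (1.0, 1.0, 1.0)
    b = (1.0 + perturbation, 1.0, 1.0)
    history = []
    for _ in range(steps):
        history.append(math.dist(a, b))
        a, b = rk4_step(a, dt), rk4_step(b, dt)
    return history


history = separation_history()
diverged = next((i for i, d in enumerate(history) if d > 1.0), None)
print(f"initial separation: {history[0]:.1e}")
if diverged is not None:
    print(f"separation first exceeds 1 at t = {diverged * 0.01:.1f}")
```

The separation starts at around 10⁻⁸ but grows roughly exponentially until the two runs are as far apart as two unrelated states of the system. Making the initial error ten times smaller only buys a little extra time, which mirrors Graf's point about the need for denser observations.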

This doesn't mean that long-term forecasts of, for example, global warming are meaningless. As with the Internet, statistical approaches help to simplify things: "We are not saying anything about how temperature will change in a certain place at a certain time," explains Graf. Instead, scientists come up with probability distributions of weather events spread over larger geographical areas and over time. One statistic that is often quoted (despite not being the most useful one) is global mean temperature. "For the global mean [a forecast] is relatively easy: it is determined by a small number of independent parameters," says Graf. Current models predict that if we double the amount of greenhouse gases in the atmosphere, global mean temperature will rise by between two and a half and six degrees Celsius.

Alongside the long-term threat of global warming there is the immediate threat of terrorism. Here, too, new tools are needed to cope with increasing complexity. "Ten years ago counter-terrorism was difficult, but not complex," says Sir Richard Dearlove, who was head of MI6 from 1999 until 2004. Back then British counter-terrorism efforts were concerned chiefly with the Provisional IRA, a highly structured and strategic organisation constrained by a clear set of political objectives. The IRA was sophisticated, but predictable. By contrast, movements like Al Qaida resemble flocks of birds: they are formless entities constantly changing in structure and size.

The aftermath of 9/11. Terrorism is now more complex than ever.

To avert the next terrorist strike, essential information has to be distilled from a jungle of sources external to the terrorist movement, each complex in its own right: the Internet, mobile phone networks, the international banking system, travel data, and CCTV footage. In terms of mathematics this means data mining — producing software packages that can spot hidden patterns in data — and also designing networks linking government and commercial agencies that have relevant information, something government departments haven't always been good at in the past.

If this makes your skin crawl, you're not alone. Our use of technology opens up a greater Big Brother potential than ever before, and Dearlove is well aware that any measures taken must be proportionate to the threats posed. Here too the interdisciplinary approach may prove fruitful: rational risk assessment has been explored extensively in other areas of business and science, medicine being the most obvious.

Perhaps the odd one out at the Cambridge meeting was David Good of the Faculty of Social and Political Science at Cambridge. Good focussed on human communication at its most natural: conversation. Conversation is almost too fundamental to analyse. There is no limit to the things we can say, and we can say them in many different ways; a wink and a smile are just as important as words and grammar. Attempts to pin down conversation formally, for example using game theory or automated grammar systems, have been made, but most have failed. "The study of conversation has ventured into a very rich, intriguing, and ill-defined swamp," says Good. "A formal account of what we do in conversation is probably a long way off, but the simple fact of the matter is that we human actors do it, and do it in a determinative manner which is intelligible by other humans. Therefore it is a process which is well-specified, but the nature of the specification eludes us for now. The value of looking at specific mathematical models is as a stimulus and reminder that we can target this class of theorising."

Stimulus is really what this first meeting of the Complex Systems Consortium was about. An overarching theory of complex systems is still a long way off, and some seemingly universal features of complexity may yet turn out to be red herrings. For the moment, people are exchanging ideas and surveying each other's work. "Numerous collaborations have already resulted from the meeting," says organiser Michelle Tuveson, "and plans are underway for potentially establishing a centre for complexity studies in Cambridge." Such a centre wouldn't be the first in the world to focus on complex systems, but if the consortium sticks to its current multi-disciplinary approach, then it would be the first of its kind in sheer breadth of purpose.
