You may not have heard his name, but you're making use of his work many times over every single day: Claude Shannon, hailed by many as the father of the information age, would have turned 100 this week, on April 30, 2016.

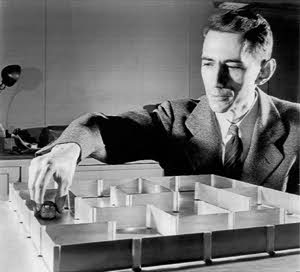

Claude Shannon playing with his electromechanical mouse Theseus, one of the earliest experiments in artificial intelligence. (Image Copyright 2001 Lucent Technologies, Inc. All rights reserved.)

Today the idea that all types of information, be it pictures, music or words, can be encoded in strings of 0s and 1s is almost commonplace. But back in the 1940s and 50s, when Shannon produced some of his ground-breaking work, it was entirely new. It was Shannon who realised that these binary digits, or bits, lay at the heart of information technology. He worked out the minimum number of bits you need, on average, to encode the symbols from any alphabet, be it the 26 letters we use to write with, or the numbers that encode the colours in a picture. And he figured out how this minimum number is related to the amount of information you can transmit over any medium, be it a phone line or (in today's world) the internet. Amazingly, he showed that even if your transmission method sometimes scrambles some of your signals, there is always a clever way of encoding your message that makes the error rate as small as you like and still guarantees a decent transmission rate (anything below a limit known as the capacity of the channel). Such clever codes are called error-correcting codes and they are what enables you to receive your information nicely and clearly most of the time.
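As a rough illustration of these two ideas, the Python sketch below computes Shannon's measure of information (the entropy) for a list of symbol probabilities, which gives the minimum average number of bits per symbol, and then runs a simple three-fold repetition code. The function names and the example probabilities are our own, chosen purely for illustration. The repetition code is only the crudest kind of error-correcting code: it cuts errors at the cost of tripling the message length, whereas Shannon showed that far more efficient codes must exist.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy in bits: the minimum average number of bits per symbol."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def encode_repetition(bits, n=3):
    """Repeat every bit n times (a very simple error-correcting code)."""
    return [b for b in bits for _ in range(n)]


def decode_repetition(received, n=3):
    """Recover each bit by majority vote over its block of n copies."""
    return [
        1 if sum(received[i:i + n]) > n // 2 else 0
        for i in range(0, len(received), n)
    ]


# 26 letters, assumed equally likely: about 4.7 bits per letter.
uniform_26 = [1 / 26] * 26
print(round(entropy_bits(uniform_26), 2))

# An illustrative four-symbol alphabet with unequal probabilities
# needs fewer bits on average than the 2 bits a fixed-length code would use.
skewed = [0.5, 0.25, 0.125, 0.125]
print(entropy_bits(skewed))  # 1.75

# Send a message through a "noisy channel" that flips two bits.
message = [1, 0, 1, 1]
corrupted = encode_repetition(message)
corrupted[1] ^= 1  # flip one copy in the first block
corrupted[4] ^= 1  # flip one copy in the second block
print(decode_repetition(corrupted))  # [1, 0, 1, 1]
```

Majority voting recovers the message here because no block of three suffered more than one flipped bit; two flips in the same block would defeat this particular code.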

Our favourite insight of Shannon's, however, involves the link between electronics and logic. What makes computers so powerful is that they can follow logical instructions, such as "if the password entered is incorrect, display an error message". But computers work on electronic circuits that, at first sight, have nothing to do with logic — so how can they perform these logical tasks? The answer comes from a system of logic that was devised long before the advent of modern computers, in the nineteenth century, by the mathematician George Boole. Boole's binary logic is based on the idea that every statement (such as "the cat is black") is either true or false. Combining statements with connectives such as AND and OR, it's possible to construct composites as complex as you like (for example, "the cat is black AND the dog is white AND I am hungry"). Boole represented such combinations using operations akin to multiplication and addition, but only using the numbers 0 (for false) and 1 (for true). This made it easier to work out the truth value of a composite statement, depending on the truth values of its components.
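To make this concrete, here is a minimal Python sketch of logic done with the numbers 0 and 1. It uses one common arithmetic rendering of the connectives (multiplication for AND, and a slightly adjusted addition for OR so that the result never exceeds 1); this is an assumption made for illustration and not necessarily the exact notation Boole used. The function names are our own.

```python
from itertools import product


def AND(x, y):
    """True (1) only when both inputs are 1: ordinary multiplication."""
    return x * y


def OR(x, y):
    """True (1) when at least one input is 1: addition, minus the overlap."""
    return x + y - x * y


def NOT(x):
    """Swap 0 and 1."""
    return 1 - x


def composite(cat_is_black, dog_is_white, i_am_hungry):
    """'The cat is black AND the dog is white AND I am hungry.'"""
    return AND(AND(cat_is_black, dog_is_white), i_am_hungry)


# Work out the truth value of the composite for every combination
# of truth values of its three components.
for values in product((0, 1), repeat=3):
    print(values, composite(*values))
```

Only the last row, where all three components are 1, gives a composite value of 1.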

Shannon realised that Boole's system, now known as Boolean algebra, was relevant to electrical circuits, which can be closed so that a current runs through them (represented by a 1) or open so that no current runs (represented by a 0). This analogy meant that Boolean algebra could be used to design and simplify electrical circuits. There are circuits (called logic gates) that exactly mimic Boole's logical operations, and this link between logic, bits and electronics is what enables computers to perform those logical tasks. Shannon's master's thesis, in which he outlined his Boolean ideas, has been described as "possibly the most important, and also the most famous, master's thesis of the century".
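The sketch below illustrates the idea in Python, using the convention above that 1 means a closed switch and 0 an open one. Two switches in series then behave like AND, and two in parallel like OR. The example circuit and the function names are hypothetical, picked only to show how a Boolean identity can save a switch; they are not taken from Shannon's thesis.

```python
from itertools import product


def series(a, b):
    """Switches in series conduct only if both are closed (AND)."""
    return a & b


def parallel(a, b):
    """Switches in parallel conduct if at least one is closed (OR)."""
    return a | b


def original_circuit(a, b, c):
    # (A in series with B) in parallel with (A in series with C):
    # four switches, because A appears twice.
    return parallel(series(a, b), series(a, c))


def simplified_circuit(a, b, c):
    # A in series with (B in parallel with C): only three switches.
    return series(a, parallel(b, c))


def equivalent(f, g, n_inputs):
    """Check that two circuits agree for every setting of the switches."""
    return all(
        f(*setting) == g(*setting)
        for setting in product((0, 1), repeat=n_inputs)
    )


print(equivalent(original_circuit, simplified_circuit, 3))  # True
```

The check confirms the Boolean identity (A AND B) OR (A AND C) = A AND (B OR C), so the three-switch circuit does the same job as the four-switch one.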

But information transmission wasn't Shannon's only interest. He was famed for riding his unicycle along the corridors at work, sometimes while juggling, and worked on a motorised pogo stick as well as mechanical maze-solving mice. He was, without doubt, one of the most important mathematicians of the twentieth century.

To find out more about Shannon's work, read:

- George Boole and the wonderful world of 0s and 1s, which has a closer look at the connection between Boolean algebra and logic.

- Coding theory: the first 50 years, which explores the error-correcting codes that stop messages from being scrambled.

- Information is surprise, which explains Shannon's measure of information, called the Shannon entropy.

- Information is bits, which looks at how to best encode a message into strings of 0s and 1s.

- Information is noisy, which looks at Shannon's work on sending information through noisy channels.

- Maths in a minute: Boolean algebra, which gives some more details on the rules of Boolean algebra.

- Maths in a minute: Simplifying circuits, which illustrates how Shannon used Boolean algebra to simplify circuit design.

- Snakes and adders, which explores how computers work, based on one of Shannon's inventions, using an example.

Comments

Claude Shannon Google Doodle

He created juggling robot in 1970's and died in 2001...

Chess and juggling were favorite activities of Shannon so he turned his genius to creating machine versions of them. https://www.youtube.com/watch?v=7fKXfwOu0Dw