The ability to see order in chaos has won the mathematician Yakov G. Sinai the 2014 Abel Prize. The Prize, named after the Norwegian mathematician Niels Henrik Abel, is awarded annually by the Norwegian Academy of Science and Letters and is viewed by many as on par with a Nobel Prize.

Yakov Sinai.

Chaos is something we see all around us every day. The weather, the fluctuations of the stock market, the eddies in our coffee as we stir in the milk, even the arrival times of buses display an unpredictability we would term chaotic. Yet, as mathematicians including Sinai have shown, it's possible to get some grip on chaotic systems by understanding their overall behaviour.

Playing with chaos

A good example of a dynamical system is a billiard ball moving around on a billiard table. You set the ball in motion, it moves towards one of the four walls, bounces off, moves on, bounces off another wall, and so on. If you assume that there is no friction and the ball doesn't lose speed when it bounces off a wall, it will keep going forever, tracing out a trajectory as it goes.

On the face of it this looks like a dynamical system that is easy to understand. If you know your law of reflection (the angle of incidence equals the angle of reflection), you can calculate the trajectory of the ball way into the future, until you get bored or run out of breath.
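The calculation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the table is a rectangle with walls parallel to the axes, the ball is a point, and the function names `fold` and `billiard_position`, along with the default table size and speed, are made up for this example. Instead of tracking each bounce, the sketch uses a standard trick: let the ball travel in a straight line through an imaginary grid of mirrored copies of the table, then fold the result back onto the real table. For axis-parallel walls this gives the same path as applying the law of reflection at every bounce.

```python
import math


def fold(u: float, size: float) -> float:
    """Map an unfolded coordinate back onto [0, size] by mirror reflection."""
    period = 2.0 * size
    u = u % period  # Python's % returns a value in [0, period) for period > 0
    return u if u <= size else period - u


def billiard_position(x0, y0, angle, t, width=2.0, height=1.0, speed=1.0):
    """Position at time t of a frictionless point ball on a rectangular table.

    (x0, y0) is the starting point, angle the initial direction in radians.
    """
    # Straight-line motion in the "unfolded" plane of mirrored tables.
    ux = x0 + speed * math.cos(angle) * t
    uy = y0 + speed * math.sin(angle) * t
    # Folding back is equivalent to reflecting off each wall in turn.
    return fold(ux, width), fold(uy, height)


if __name__ == "__main__":
    # Follow one ball, launched at 30 degrees, for a few time steps.
    for step in range(6):
        print(step, billiard_position(0.5, 0.5, math.radians(30), float(step)))
```

The folding trick only works because the walls are straight and parallel to the axes. On a table with tilted or curvy walls, each bounce has to be computed separately.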

But there are other questions you can ask. For example, what if you shoot a second ball from the same location as the first, but in a direction that is ever so slightly different? Will the trajectories of the two balls stay close to each other, or will the balls eventually end up in wildly different places? And what if you alter the shape of the table, allowing more than four walls positioned at various angles to each other, or even curvy walls?

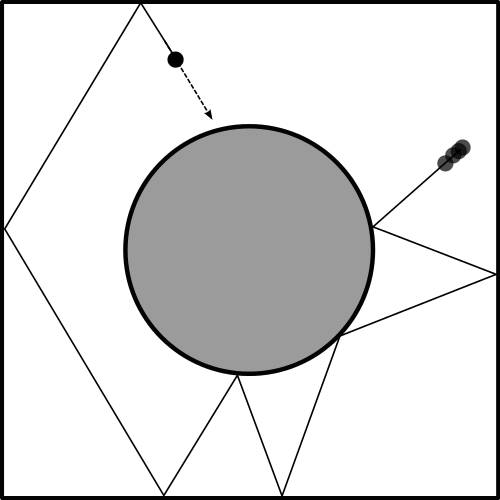

The dynamics of such generalised games of billiard is one area to which Sinai has made significant contributions. He is particularly famous for a type of billiard that now carries his name. In it the idealised ball (idealised because it is assumed to be a massless point immune to friction) moves around a square table which has a disc removed from its centre (see the image above).

A trajectory in Sinai billiard. Image: G. Stamatiou.

This version of billiard truly deserves to be called a chaotic dynamical system. If you start two balls off with slightly different initial conditions (that is, position and direction of motion) their trajectories will soon diverge wildly: in fact, the divergence grows exponentially. This means that the game is extremely unpredictable: since in practice you will never know the initial conditions of a ball with 100% accuracy, you simply can't tell where the ball will end up even after a relatively short length of time. (For a rectangular billiard table the two trajectories may also diverge, but in this case the divergence grows at a constant rate, rather than exponentially, so it's easier to keep a handle on things.)
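The difference between the two kinds of growth can be put in symbols. What follows is a rough sketch rather than a precise statement: write $\delta_0$ for the initial distance between the two balls and $\delta(t)$ for their distance at time $t$. The symbols $c$ and $\lambda$ are introduced here purely for illustration. Here $c$ is a constant set by the small difference in direction, and $\lambda > 0$ is the rate of exponential divergence (often called a Lyapunov exponent).

$$
\delta(t) \lesssim \delta_0 + c\,t \quad \text{(rectangular table)},
\qquad
\delta(t) \approx \delta_0\, e^{\lambda t} \quad \text{(Sinai billiard, while } \delta(t) \text{ is still small)}.
$$

If a prediction counts as useless once the error reaches some tolerance $\Delta$, then in the exponential case this happens at about

$$
t^{*} \approx \frac{1}{\lambda} \ln\frac{\Delta}{\delta_0}.
$$

Measuring the initial conditions ten times more accurately therefore buys only a fixed extra time, $\ln(10)/\lambda$, whereas on the rectangular table it extends the useful prediction time roughly tenfold.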

Sinai also showed that the trajectory of a single ball will eventually cover virtually all of the table (this is a loose way of saying that the system is ergodic).

All this might not appear particularly significant, given that humanity's interest in billiard is limited, and that those who play it usually do so on a rectangular table. But Sinai's motivation lay elsewhere. He wanted to understand the behaviour of an ideal gas, a theoretical model of a gas in which molecules are imagined to behave like point particles moving around a square, bouncing off its walls and also off each other. Sinai's square-with-a-hole version of billiard is a very simplified version of such a gas. The first chaotic dynamical system ever to be studied systematically was developed by Jacques Hadamard in 1898. One of Sinai's breakthrough results was to show that the trajectories of molecules in his particular model of billiard behave just like the trajectories in Hadamard's chaotic system.

Entropy

The little explorations above hint at how you might come to grips with complicated dynamical systems. You try to quantify to what extent the trajectories go "wild". One of Sinai's greatest contributions to the theory of dynamical systems is yet another way to quantify a system's complexity. It's called the Kolmogorov-Sinai entropy, honouring the contribution of Andrey Kolmogorov alongside Sinai's.

The concept of entropy was first developed at the end of the nineteenth century in the context of thermodynamics. The entropy of a physical system is a measure of the amount of disorder it contains. For example, in a block of ice all water molecules are locked in a rigid lattice. That's a very ordered situation, so the entropy is low. In water vapour, by contrast, the molecules whizz around randomly, bouncing off each other as they go. That's a much more disordered situation, and the entropy is high. (This is slightly simplifying the definition of entropy; see this article for more precise information.)

Entropy applies to ice as well as messages.

One important thing to notice is that disorder, and therefore entropy, is related to information: it takes a lot more information to describe the velocities of zillions of individual molecules moving around randomly than it takes to describe the rigid lattice of an ice block. This link between entropy and information was exploited in 1948 by the mathematician Claude E. Shannon, who was interested in how information can be transmitted, for example over the telephone. Shannon developed a mathematical notion of entropy that measures how densely information is packed into a message. As an example, the sentence,

"I, erm, kind of, uhm, quite like you,"

has the same meaning as the sentence,

"I fancy you,"

but the former uses a lot more symbols than the latter. That is, the amount of information per symbol is lower in the first than in the second sentence. According to Shannon's definition, the first sentence has a lower entropy than the second: the higher the information density, the higher the entropy.

The entropy of a message also tells you something about what to expect from the next unit (e.g. word) in the message. If the entropy is high, then the next word is likely to contain new information. And since you generally don't know what that information is, the next word is unpredictable. If, however, the entropy is very low, then the additional information provided by the next word is quite likely to be zero. For example, it might be an "uhm" or an "er", or simply confirm what you already know. You might not be able to predict the exact word, but you can predict its information content to be low. In that sense, a message with high entropy is less predictable than a message with low entropy.
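To make the link between entropy and predictability concrete, here is a minimal Python sketch of Shannon's formula, $H = -\sum_i p_i \log_2 p_i$, where $p_i$ is the probability of the $i$-th symbol. It rests on a big simplifying assumption: the probabilities are estimated from how often each character occurs in the message itself, and each character is treated as independent of the ones before it. It therefore measures how evenly the symbols are used, not what the message means, so it is only a crude stand-in for the information density of the two sentences above. The function name `shannon_entropy` is made up for this example.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Empirical Shannon entropy of a message, in bits per symbol.

    Symbol probabilities are estimated from character counts in the
    message itself; this is an illustrative assumption, not a model of
    meaning.
    """
    if not message:
        return 0.0
    counts = Counter(message)
    n = len(message)
    # -sum(p * log2(p)) rewritten as sum(p * log2(1/p)) with p = c / n.
    return sum((c / n) * math.log2(n / c) for c in counts.values())


if __name__ == "__main__":
    for text in ["aaaaaaaa", "aabb", "abcdefgh"]:
        print(repr(text), shannon_entropy(text))
```

A message made of one repeated symbol is perfectly predictable and has entropy 0. A message that uses eight different symbols equally often has entropy 3 bits per symbol, the maximum possible for eight symbols, because every new symbol is a complete surprise.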

Inspired by Shannon's definition of entropy, and the work of Kolmogorov, Sinai developed a notion of entropy that applies to a certain class of dynamical systems. It measures the unpredictability of the system: the higher the entropy, the more unpredictable it is. And since unpredictability is closely related to disorder, this notion of entropy links back nicely to the thermodynamic origin of the idea. Kolmogorov-Sinai entropy has proved extremely useful in understanding complex dynamical systems. As Arne B. Sletsjøe writes in a short essay on Sinai's work, "the concept has shown its strength through the highly adequate answers to central problems in the classification of dynamical systems."
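For readers who would like to see the shape of the definition, here is the standard textbook formulation, given as a sketch. The notation is not taken from the article: $T$ is the rule that moves the system forward one time step, $\mu$ measures the size of regions of the system's state space and is preserved by $T$, and $\xi$ is a way of chopping the state space into finitely many pieces $A_1, \dots, A_k$. Recording which piece the system is in at each time step turns a trajectory into a message, and Shannon's formula can then be applied to it:

$$
H(\xi) = -\sum_{i=1}^{k} \mu(A_i) \log \mu(A_i),
\qquad
h(T, \xi) = \lim_{n \to \infty} \frac{1}{n}\, H\!\left(\xi \vee T^{-1}\xi \vee \dots \vee T^{-(n-1)}\xi\right),
\qquad
h(T) = \sup_{\xi}\, h(T, \xi).
$$

Here $\xi \vee T^{-1}\xi \vee \dots \vee T^{-(n-1)}\xi$ is the finer partition obtained by recording the piece visited at each of the first $n$ steps. The middle quantity is the average amount of new information gained per time step, and the Kolmogorov-Sinai entropy $h(T)$ is the largest value this can take over all possible ways of chopping up the space.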

It is for these and many other "fundamental contributions to dynamical systems, ergodic theory, and mathematical physics" that Sinai has been awarded the Abel Prize. It carries a cash award of nearly £600,000 and will be handed to Sinai by Crown Prince Haakon of Norway on 20 May 2014.

You can find out more about the Abel Prize and Sinai's work on the Abel Prize website.

Comments

typo

"lose speed" not "loose speed".

PLUS May 2014: entropy : grappling with chaos (Sinai)

I think Shannon's entropy is akin to negative entropy (ie information) :

ie rather the opposite of the thermodynamics model.

Philip Bradfield

Dunfermline