If I told you that 25% of the sky overhead is filled with clouds, what would that tell you about the weather? Not much is the answer. The 25% could amount to one giant cloud about to unleash a biblical downpour, or it could spread across lots of lovely little clouds that only heighten your joy at an otherwise sunny day. To predict the weather from the clouds, you need to know more.

Clouds make much of the weather.

It's surprising, then, that current state-of-the-art weather models use crude approximations of clouds, such as the cloud fraction we just described. The reason is that clouds tend to be small and that weather models can't take account of what is going on in every small piece of the sky. If they did, then even the fastest supercomputer would never finish the calculations necessary to produce a forecast. Even a drastic increase in computing power would not suffice to solve this problem.

"There's a big difference between having one huge cloud and having lots of very small sporadic clouds," says Teo Deveney, a mathematician at the University of Bath. "That will cause quite a large difference in how the weather will behave, but the [weather] models that are currently being used don't account for this."

Hope is on the horizon, though, coming from a slightly different direction. Rather than trying to speed up the calculations, perhaps we could use the power of computers to learn complex tasks by looking at large amounts of data. Machine learning is a form of artificial intelligence which does just that, and which is currently making inroads into all sorts of areas of life, from helping with your online shopping to healthcare. If the idea works for meteorology, then weather forecasts would become more accurate while at the same time needing less computing power than current weather models do.

Weather forecasting the traditional way

The weather is a result of the motion of the Earth's atmosphere and oceans, and of the way moisture is moved around in the atmosphere, coupled to changes in the pressure and temperature of the air. The atmosphere and the oceans are fluids (liquids or gases). Luckily for meteorologists, there's a set of equations that describes the behaviour of fluids, called the Navier-Stokes equations.

The general idea behind a weather forecast is relatively straightforward. You start by taking measurements of factors that describe the current weather, such as temperature, air pressure and density, wind speed and the moisture content of the air. You then feed these starting values to a mathematical model that is built on the Navier-Stokes equations and which allows you to evolve the weather forward in time on a computer.
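
To make "evolving the weather forward in time" a little more concrete, here is a toy sketch. It is not the Navier-Stokes equations: as a deliberately simplified stand-in (our assumption), it carries a temperature profile along a ring of grid points with a constant wind, using a first-order upwind finite-difference step. The function name `advect` and all the numbers are hypothetical.

```python
import numpy as np


def advect(temps, wind_speed, dx, dt, n_steps):
    """Carry a 1D temperature profile downwind on a periodic grid.

    Toy stand-in for a weather model: solves dT/dt + u * dT/dx = 0 with a
    first-order upwind scheme, assuming a constant wind speed u > 0.
    """
    courant = wind_speed * dt / dx
    if not 0.0 < courant <= 1.0:
        # The upwind scheme is only stable if air moves at most one cell per step.
        raise ValueError("need 0 < wind_speed * dt / dx <= 1")
    t = np.asarray(temps, dtype=float).copy()
    for _ in range(n_steps):
        # np.roll(t, 1)[i] is t[i - 1], the upwind neighbour for a wind towards +x.
        t = t - courant * (t - np.roll(t, 1))
    return t


# Starting values ("measurements") on five grid points, with a warm patch at index 2.
start = [10.0, 10.0, 15.0, 10.0, 10.0]
print(advect(start, wind_speed=5.0, dx=1000.0, dt=100.0, n_steps=4))
```

The stability check reflects a real constraint on this kind of scheme: halve the grid spacing `dx` and the time step `dt` has to shrink too, so a finer grid costs more than just the extra grid points.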

In practice, though, several things make weather forecasting tricky. Firstly, you can't possibly measure temperature, pressure, moisture, etc. at every single point on the Earth, or in the region you're interested in. Secondly, you can't measure them with infinite accuracy. The famous butterfly effect then means that these inevitable inaccuracies can grow very large as the computer makes its calculations, potentially producing a forecast that is way off. Thirdly, the calculations involved in a weather model require a huge amount of computing power (this is due to the trickiness of the Navier-Stokes equations; find out more in this article).
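
The butterfly effect is classically illustrated with the Lorenz equations, a famous three-variable toy model of convection rather than a real weather model. The sketch below (function names are hypothetical; σ = 10, ρ = 28 and β = 8/3 are the standard textbook values) runs two copies that start one millionth apart and records how far apart they drift.

```python
import math


def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivatives (dx/dt, dy/dt, dz/dt) of the Lorenz system."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one classical fourth-order Runge-Kutta step."""
    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)))
    k3 = lorenz_rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)))
    k4 = lorenz_rhs(tuple(s + dt * k for s, k in zip(state, k3)))
    return tuple(
        s + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def separations(start, nudge=1e-6, dt=0.01, n_steps=4000):
    """Distance between two runs whose starting x differs by `nudge`."""
    a = start
    b = (start[0] + nudge, start[1], start[2])
    out = []
    for _ in range(n_steps):
        a, b = rk4_step(a, dt), rk4_step(b, dt)
        out.append(math.dist(a, b))
    return out


seps = separations((1.0, 1.0, 1.0))
print(f"after 1 step: {seps[0]:.1e}, largest later gap: {max(seps):.1f}")
```

A difference far smaller than any instrument could detect ends up as large as the variables themselves, which is why forecasters run ensembles rather than trusting a single run.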

Pixelating the Earth

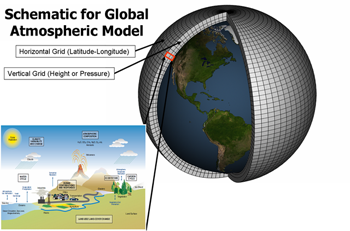

To be able to produce a forecast at all, weather modellers divide the Earth and its atmosphere into a grid, much like a TV or computer monitor divides an image up into pixels. And just as every pixel on a monitor is assigned a single colour, so every grid box is assigned just a single value for each of pressure, moisture, temperature, and so on — a value that comes from a manageable number of measurements taken in an individual grid box. This makes calculations tractable. The butterfly effect can then be mitigated using techniques such as ensemble forecasting (see here to find out more).
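
In the simplest picture, the single value a grid box carries is the average of a finer-grained field over that box. The sketch below coarse-grains a 2D field with NumPy by averaging non-overlapping `box × box` blocks. This is an illustrative simplification (real forecasting systems combine observations with the model through data assimilation rather than plain averaging), and `coarse_grain` is a hypothetical name.

```python
import numpy as np


def coarse_grain(field, box):
    """Average a 2D field over non-overlapping box-by-box blocks ("pixels")."""
    field = np.asarray(field, dtype=float)
    ny, nx = field.shape
    if ny % box or nx % box:
        raise ValueError("field dimensions must be multiples of the box size")
    # Split each axis into (number of boxes, cells per box), then average the cells.
    return field.reshape(ny // box, box, nx // box, box).mean(axis=(1, 3))


fine = np.arange(16).reshape(4, 4)  # a 4x4 "high-resolution" temperature map
print(coarse_grain(fine, 2))        # one value per 2x2 grid box
```

Whatever varied inside each 2 × 2 block is gone from the coarse map; that lost sub-grid detail is what parameterisations try to account for.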

Weather models divide the Earth and its atmosphere into a grid. Image: NOAA.

In current state-of-the-art weather models the grid points are about 1.5km apart in the horizontal direction and 300m in the vertical direction: even fast supercomputers can't handle a higher resolution. Clouds can of course be much smaller than that and they can do all manner of weird and wonderful things inside a single grid box. Other processes too happen at a scale that's smaller than the grid boxes.

To take account of such processes, weather models estimate them using mathematical formulae that roughly describe their physics. Such estimates are called parameterisations.

"A parameterisation is a subprogram which calculates the physics [of what is happening inside a grid box] that then links in with the grid scale," explains Chris Budd, a mathematician at the University of Bath and an expert in weather forecasting as well as machine learning. The fraction of sky covered in clouds in a single grid box is one quantity that is parameterised in this way. "Apart from clouds, there are parameterisations for things like radiation from the Sun, waves that ripple through the atmosphere caused by gravity, and the friction that wind experiences when blowing over the Earth's surface," says Budd.
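
To give a flavour of what a cloud-fraction parameterisation can look like, here is a sketch of one classic textbook form, the relative-humidity scheme associated with Sundqvist: no cloud until the grid-box mean relative humidity passes a critical value, then a fraction that rises to 1 at saturation. We are not claiming this is what the Met Office uses; the critical value of 0.8 and the function name are illustrative assumptions.

```python
import math


def cloud_fraction(rel_humidity, rh_crit=0.8):
    """Diagnose grid-box cloud fraction from grid-box mean relative humidity.

    Sundqvist-style form: 0 below rh_crit, rising to 1 at saturation.
    """
    if not 0.0 <= rh_crit < 1.0:
        raise ValueError("rh_crit must lie in [0, 1)")
    rh = min(max(rel_humidity, 0.0), 1.0)  # clamp to a physical range
    if rh <= rh_crit:
        return 0.0
    return 1.0 - math.sqrt((1.0 - rh) / (1.0 - rh_crit))


for rh in (0.7, 0.9, 0.95, 1.0):
    print(rh, round(cloud_fraction(rh), 3))
```

Note what the output does not say: a fraction of 0.5 could be one large cloud or many small ones, which is exactly the limitation raised at the start of this article.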

How can AI help?

Machine learning is about computer algorithms learning how to spot patterns in data and then putting that pattern-spotting ability to good use. A classic example here involves learning to tell pictures of cats from pictures of dogs. To teach a machine learning algorithm to do that, you first feed it lots of pictures of cats and lots of pictures of dogs, together with the correct answer for each picture: whether it's a cat or a dog.

In a mathematical process that seems miraculous but is highly effective, the algorithm then churns through the pictures, tuning internal parameters until it gets the correct answer for a very high proportion of the training pictures. You can then feed it new pictures of cats and dogs and it'll be able to tell what animal is shown with a high degree of accuracy. To find out more about how this works, see this article.
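
The "tuning internal parameters" step can be shown in miniature. The sketch below swaps the deep networks used for real images for the simplest possible learner, logistic regression trained by gradient descent, and swaps pictures for two made-up numbers per animal (hypothetical features, invented purely for illustration). Each pass nudges the weights to reduce the error on the labelled examples.

```python
import math


def train(examples, labels, lr=0.5, epochs=2000):
    """Fit logistic-regression weights by batch gradient descent."""
    n_features = len(examples[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(examples, labels):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of "cat"
            for i in range(n_features):
                grad_w[i] += (p - y) * x[i]
            grad_b += p - y
        # Step each parameter against the gradient of the average log-loss.
        w = [wi - lr * g / len(examples) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(examples)
    return w, b


def predict(w, b, x):
    """Return 1 ("cat") if the score is positive, else 0 ("dog")."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


# Hypothetical features per picture, e.g. (ear pointiness, snout length).
pictures = [(0.9, 0.2), (0.8, 0.3), (0.7, 0.1), (0.2, 0.8), (0.3, 0.9), (0.1, 0.7)]
answers = [1, 1, 1, 0, 0, 0]  # 1 = cat, 0 = dog
w, b = train(pictures, answers)
print(predict(w, b, (0.85, 0.15)), predict(w, b, (0.15, 0.85)))
```

The two calls at the end play the role of "new pictures": points the learner never saw during training.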

When it comes to weather forecasts, the hope is that machine learning algorithms could learn, by looking at lots of real-life observations of the weather, how to determine some of the detail of what is happening inside a grid box from the numbers associated with the grid box as a whole. If they can, then the algorithms could be incorporated into weather models, replacing existing parameterisations and allowing the models to include more detailed information about sub-grid processes, including more detail about the behaviour and organisation of clouds.

AI to the test

Budd and Deveney are both members of a research project called Maths4DL (mathematics for deep learning), which explores a range of potential applications of machine learning as well as the mathematics that underpins it. Together with colleagues, they supervised a project by former Masters student Matthew Coward which, in collaboration with the Met Office, tested the idea that machine learning could provide more information about clouds.

The total surface area of these cirrus clouds is bigger than that of a ball of cloud of the same volume. Image: Famartin, CC BY-SA 4.0.

The first thing that needs to be decided for such a test is what information about clouds we'd like a machine learning algorithm to learn. The answer Coward came up with is based on a beautiful result from geometry: if a fixed percentage of a volume of sky is filled with clouds, then the surface area of the entire cloud mass tends to be smaller when the cloud mass is all bunched up than when it is split up into lots of little clouds (see here for a mathematical justification of this claim).

Therefore, the surface area of the entire cloud mass, also called the cloud perimeter, is a good proxy for what kind of clouds there are in a grid box — large cumulus clouds or wispy cirrus clouds. It's also a useful parameter to improve other parameterisations and algorithms, for example algorithms that predict the transfer of radiation through clouds (see this article to find out more).
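
A quick calculation backs up the geometric claim. As a deliberate simplification (our assumption, not Coward's model), treat the cloud mass as N equal spheres with a fixed total volume V. Each has radius r = (3V / (4πN))^(1/3), so the total surface area N · 4πr² works out to N^(1/3) times the area of a single sphere: splitting a cloud into 8 pieces doubles its surface, and splitting it into 1,000 pieces multiplies it by 10.

```python
import math


def total_area_of_spheres(total_volume, n_pieces):
    """Combined surface area of n equal spheres sharing a fixed total volume."""
    radius = (3.0 * total_volume / (4.0 * math.pi * n_pieces)) ** (1.0 / 3.0)
    return n_pieces * 4.0 * math.pi * radius ** 2


one_cloud = total_area_of_spheres(1.0, 1)
for n in (1, 8, 1000):
    ratio = total_area_of_spheres(1.0, n) / one_cloud
    print(f"{n:>5} clouds: {ratio:.2f} x the surface of one cloud")
```

Real clouds are not spheres, but the direction of the effect is the same: for a fixed amount of cloud, more fragmentation means more surface.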

The question is whether a machine learning algorithm can estimate the cloud perimeter within a single grid box from numbers that are assigned to the grid box as a whole. "This was the target for [Coward's] project: to machine learn the cloud perimeter based on a bunch of environmental factors," says Deveney.

To train the algorithm, Coward used an impressive collection of observations of real-life clouds recorded in Oklahoma, USA. "They had a bunch of cameras set up in a 6km³ [volume of space]," explains Deveney. "The cameras could read, at a metre grid scale, whether there was a cloud present or not." Records of the clouds were taken every 20 seconds over a period of three years, resulting in what Coward calls an "entirely unique insight into the life cycle of clouds".
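
Given a metre-scale grid of "cloud / no cloud" readings like this, the cloud perimeter can be estimated by counting the cell faces where a cloudy cell touches a clear one. The NumPy sketch below does that for a boolean array; treating everything outside the observed volume as clear is our assumption, and `cloud_surface_area` is a hypothetical name rather than the method used in Coward's project.

```python
import numpy as np


def cloud_surface_area(mask, cell_size=1.0):
    """Area of the boundary between cloudy (True) and clear (False) cells.

    Works for a 2D or 3D boolean grid; cells outside the grid count as clear.
    """
    cells = np.pad(np.asarray(mask, dtype=np.int8), 1, constant_values=0)
    # Along each axis, a change between neighbours (0->1 or 1->0) is one exposed face.
    faces = sum(int(np.abs(np.diff(cells, axis=ax)).sum()) for ax in range(cells.ndim))
    # Each face has area cell_size**2 in 3D (or length cell_size in 2D).
    return faces * cell_size ** (cells.ndim - 1)


# Same cloud fraction (8 of 64 cells), arranged two ways.
clumped = np.zeros((4, 4, 4), dtype=bool)
clumped[:2, :2, :2] = True        # one 2x2x2 cloud
scattered = np.zeros((4, 4, 4), dtype=bool)
scattered[::2, ::2, ::2] = True   # eight separate 1x1x1 clouds
print(cloud_surface_area(clumped), cloud_surface_area(scattered))
```

Both grids have exactly the same cloud fraction, yet the scattered arrangement has twice the surface area: the kind of difference a cloud fraction alone cannot see.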

Coward used this data to train two machine learning algorithms (to be precise, deep learning algorithms; find out what this means here). Once they were trained, he compared the algorithms' predictions of the cloud perimeter to the actual cloud perimeter as observed by the Oklahoma cameras.

The better of the two algorithms achieved a percentage error of 16%. That's not zero, but it's also not huge. Indeed, the best-known way of parameterising the cloud perimeter without using machine learning has a percentage error of nearly 24% (see Coward's poster to find out more). In this case, then, machine learning cuts the error by roughly a third.
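
The comparison is simple arithmetic on error rates. The sketch below also shows one common definition of percentage error, the mean absolute percentage error; the article doesn't say which exact metric the project used, so treat that definition as an assumption. With the rounded figures quoted above, the reduction is (24 - 16) / 24, about a third; the exact value depends on the unrounded errors.

```python
def mean_abs_percentage_error(predicted, observed):
    """Average of |predicted - observed| / |observed|, as a percentage."""
    if len(predicted) != len(observed) or not observed:
        raise ValueError("need equal-length, non-empty sequences")
    terms = [abs(p - o) / abs(o) for p, o in zip(predicted, observed)]
    return 100.0 * sum(terms) / len(terms)


def relative_reduction(baseline_error, new_error):
    """Fraction by which new_error improves on baseline_error."""
    return (baseline_error - new_error) / baseline_error


print(round(relative_reduction(24.0, 16.0), 3))  # rounded figures from the text
```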

Proof of concept

Coward's project counts as one of a number of initial tests to see whether machine learning has to offer anything at all to weather forecasting. "[Machine learning] is a very new approach for people in the field to pursue," says Deveney. "We're at a stage now where most of it is experimental, where people are trying different things and trying to come up with new techniques and seeing how they perform." For an overview of some of the things that are being tried see this article.

The hope is that machine learning could eventually be employed to figure out not just clouds but also other phenomena that happen inside the grid boxes of weather models. If the approach works and artificial intelligence ends up having a role to play in the forecast you see on your weather app, we will be sure to let you know.

About this article

Chris Budd OBE is Professor of Applied Mathematics at the University of Bath. Teo Deveney is Research Associate at the Department of Mathematical Sciences at the University of Bath. Both are part of Maths4DL, a joint research programme of the Universities of Bath and Cambridge and University College London, which explores the mathematics of deep learning.

Marianne Freiberger, Editor of Plus, interviewed Budd and Deveney in December 2022.