The human eye is a marvellous thing. You, I, and most people without vision problems, can see objects further away than we could travel in our lifetimes. The Sun, clearly visible on this cloudless summer's day, is 149 million kilometres away. And tonight, when I look at the stars, I'll be looking across light years. "We can see pretty much as far as you like," says Ben Allanach, professor of theoretical physics at the University of Cambridge. "If you look at a star, that's light years away, you can see it as long as the object is reasonably bright."

Seeing stars

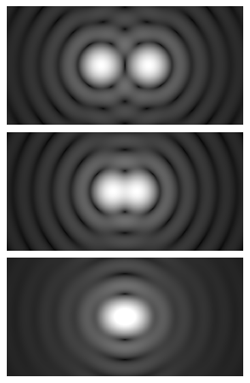

Two Airy discs at various spacings.

There are limits, though, to what our eyes can see. One of these is angular resolution. Your eye sees light rays reflected off (or generated by) objects. These light rays pass through your pupil and are focussed by the lens in your eye on the retina. The size of your pupil (similar to the aperture of a camera) determines how well you can tell two things apart, depending on the angle between the light rays arriving from those objects.

Light consists of waves and, just as a flow of water will spread out after it has passed under a narrow bridge, the light spreads out – or diffracts – as it passes through a gap. This can lead to light waves interfering and creating diffraction patterns, as has been famously demonstrated by the double slit experiment. This spreading of light creates an Airy disc – the limit to how focussed a spot of light can be after the light has passed through a circular hole. Two different light sources can only be distinguished if their Airy discs do not overlap. This limit, the angular resolution, is described by the angle \( \theta \) between the light rays as they enter the eye. The angular resolution depends on the size of the hole (\( d \), its diameter measured in metres) and the wavelength (\( \lambda \)) of the light:

\[ \theta \approx 1.22 \frac{\lambda}{d}. \]
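To get a feel for the numbers, here is a minimal Python sketch that evaluates the relation above for a human eye. The pupil diameter (3 millimetres) and the wavelength (550 nanometres, green light) are illustrative assumptions, not figures from the article, and the function name `rayleigh_angle` is made up for this example.

```python
import math


def rayleigh_angle(wavelength_m, aperture_m):
    """Smallest resolvable angle in radians, theta ~ 1.22 * lambda / d."""
    if wavelength_m <= 0 or aperture_m <= 0:
        raise ValueError("wavelength and aperture must be positive")
    return 1.22 * wavelength_m / aperture_m


# Assumed values for illustration only (not taken from the article).
WAVELENGTH_M = 550e-9  # green light, 550 nanometres
PUPIL_M = 3e-3         # pupil diameter, 3 millimetres

theta = rayleigh_angle(WAVELENGTH_M, PUPIL_M)

# Convert radians to arcseconds (1 degree = 3600 arcseconds).
theta_arcsec = math.degrees(theta) * 3600

# For small angles, separation ~ distance * angle.
separation_at_1km_m = 1000.0 * theta

print(f"theta = {theta:.3e} rad = {theta_arcsec:.1f} arcseconds")
print(f"smallest separation at 1 km = {separation_at_1km_m:.2f} m")
```

Under these assumptions the angle comes out at roughly 46 arcseconds, so two point sources a kilometre away would need to be about 20 centimetres apart to be told apart. Real eyes usually do somewhat worse than this diffraction limit because of imperfections in the lens and the spacing of cells in the retina.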

Over the rainbow

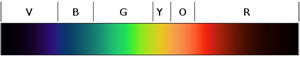

Another limit to our vision is related to the frequency of the light we can see. Light consists of electromagnetic waves travelling at approximately 3×10⁸ metres per second. These waves can come with different frequencies and corresponding wavelengths. The colours we see result from the wavelength and frequency of the light wave – red has a wavelength of 620-750 nanometres and a frequency of 400-484 terahertz, across to violet at 380-450 nanometres and 668-789 terahertz.
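Wavelength and frequency are tied together by the speed of light: frequency equals speed divided by wavelength. The short Python sketch below uses that relation to check the figures quoted above. The function names are made up for this example, and the speed of light is taken as its exact defined value in a vacuum.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact defined value in a vacuum


def frequency_thz(wavelength_nm):
    """Frequency in terahertz of light with the given wavelength in nanometres."""
    wavelength_m = wavelength_nm * 1e-9
    return SPEED_OF_LIGHT_M_PER_S / wavelength_m / 1e12


def wavelength_nm(freq_thz):
    """Wavelength in nanometres of light with the given frequency in terahertz."""
    freq_hz = freq_thz * 1e12
    return SPEED_OF_LIGHT_M_PER_S / freq_hz * 1e9


# Red: the quoted wavelength band, converted to frequencies.
red_low_thz = frequency_thz(750)
red_high_thz = frequency_thz(620)

# Violet: the quoted frequency band, converted back to wavelengths.
violet_short_nm = wavelength_nm(789)
violet_long_nm = wavelength_nm(668)

print(f"red:    {red_low_thz:.0f} to {red_high_thz:.0f} THz")
print(f"violet: {violet_short_nm:.0f} to {violet_long_nm:.0f} nm")
```

Longer wavelengths go with lower frequencies, which is why the red end of the spectrum has the smallest frequency values and the violet end the largest.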

The visible spectrum from violet (left) to red (right).

The light we can see spans the colours of the rainbow, but light waves fill the air around us in frequencies above and below that visible spectrum. Eyes of different construction to ours can see this ultraviolet or infrared light. "Humans don't see ultraviolet, but insects do," says Allanach. For example, as well as having compound eyes made up of thousands of lenses, bees also have additional light receptor cells that respond to ultraviolet light. (You can read more about the super powers of bees here.)

An interesting illustration of observation of colour comes from the artist Neil Harbisson. Harbisson is completely colour-blind, a very rare condition. "He sees the world in black and white," says Allanach. But Harbisson, who identifies as a cyborg, has enhanced his body to allow him to experience colour. He's had a camera implanted on his head that turns colours it captures into sound.

"The sound gets transmitted through his bone, into his ear," says Allanach. "He can hear colours. When the phone rings, it sounds purple." Harbisson's experience of colour switches one sense – sight – for another – hearing – something called synesthesia. "And he's had it enhanced so he can see, for example, infrared light. Apparently when someone uses an old-school remote near him, he hears it." (You can watch a TED Talk by Harbisson here.)

More than human

Neil Harbisson. Image: Dan Wilton/The Red Bulletin, CC BY 2.0.

This sounds extreme, but we've been in the business of enhancing our bodies and our senses for a long time. From ear trumpets, to glasses and contact lenses, and the advancements of cochlear implants – humans have been finding ways to overcome their physical limitations. And scientifically we have been extending our observational abilities for centuries. The ancient Greeks used magnifying glasses, and the development of microscopes in the seventeenth century allowed new insights in biology. Galileo Galilei built one of the first telescopes and discovered moons orbiting Jupiter in 1610. Bigger lenses improve the angular resolution (the smallest angle that can be resolved gets smaller), allowing astronomers to see smaller objects and to separate stars that appear very close together in the sky.
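The benefit of a bigger lens can be made concrete by turning the resolution relation around: if the smallest resolvable angle is about 1.22 times the wavelength divided by the diameter, then the diameter needed to resolve a given angle is about 1.22 times the wavelength divided by that angle. The Python sketch below does this for two stars separated by one arcsecond and by a tenth of an arcsecond. The separations and the 550 nanometre wavelength are assumptions chosen for illustration, the function name is made up, and the calculation ignores the blurring caused by the Earth's atmosphere.

```python
import math


def min_aperture_m(wavelength_m, angle_rad):
    """Smallest lens diameter (metres) that resolves angle_rad, d ~ 1.22 * lambda / theta."""
    if wavelength_m <= 0 or angle_rad <= 0:
        raise ValueError("wavelength and angle must be positive")
    return 1.22 * wavelength_m / angle_rad


def arcsec_to_rad(arcsec):
    """Convert an angle from arcseconds to radians."""
    return math.radians(arcsec / 3600.0)


WAVELENGTH_M = 550e-9  # assumed: green light

# Assumed star separations, for illustration only.
d_one_arcsec = min_aperture_m(WAVELENGTH_M, arcsec_to_rad(1.0))
d_tenth_arcsec = min_aperture_m(WAVELENGTH_M, arcsec_to_rad(0.1))

print(f"1 arcsecond needs a lens of about {d_one_arcsec * 100:.1f} cm")
print(f"0.1 arcsecond needs a lens of about {d_tenth_arcsec:.2f} m")
```

Resolving an angle ten times smaller needs a lens ten times wider, which is one reason astronomers keep building bigger telescopes.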

Microscopes and telescopes both use lenses to magnify the observed objects. "But when you get down to a certain level, say of a molecule, no [optical] microscope is going to help you see that," says Allanach. So you have to use other devices such as electron microscopes and scanning probe microscopes.

Mathematics and technology can now allow us to see so much more, even inside our very selves. Magnetic resonance imaging (MRI) works by making the magnetic fields in water molecules oscillate and detecting the resulting disturbances of an electromagnetic field. (You can read more about MRI here.) MRI machines reconstruct an image using mathematical techniques implemented in a computer program, allowing us to see where the water is in the body. Rather than just amplifying our human senses, like glasses or a microscope, the machine reconstructs the image for us from the information it senses. "It's not seeing in the usual sense," says Allanach.

But is it really that different from our normal human experience of seeing? "When we see something, nothing gets to the centre of our brain without going through some visual processing," says Allanach. "There's already processing before you're aware of it, before your CPU, the computer in the middle of your brain, gets the information. So it's not as different observing something through some instrument that you've built, than it is just observing it yourself."

About the article

Ben Allanach is a Professor in Theoretical Physics at the Department of Applied Mathematics and Theoretical Physics at the University of Cambridge. His research focuses on discriminating different models of particle physics using LHC data. He worked at CERN as a research fellow and continues to visit frequently.

Rachel Thomas is Editor of Plus.

This article is part of our Who's watching? The physics of observers project, run in collaboration with FQXi. Click here to see more articles about the limits of observation.