Changing the face of science

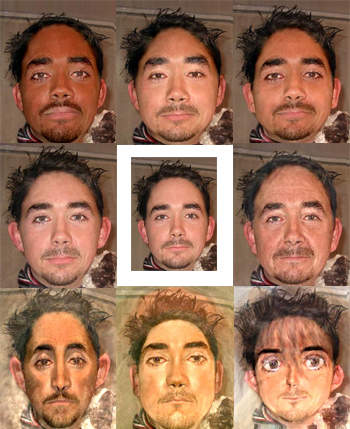

The many faces of Marc. Clockwise from top left: Afro-Caribbean, East Asian, West Asian, older gentleman, manga character, Botticelli painting, El Greco style, teenager. The centre image is the original photo used for the manipulation.

Face of the Future is an EPSRC-funded public engagement project, run out of the University of St Andrews, that explores the latest advances in facial computer vision and graphics and their societal implications. The project has developed a number of interactive exhibits that have toured venues such as the Science Museum and the BA Festival of Science in York. The team has also produced online demos and a lecture presentation aimed at engaging the public with the technology.

The face transformer manipulates your facial features so that you look older or younger, like a member of a different ethnic group, or even like a portrait in a particular art style. The team behind it collected facial information for people of different ages and ethnic backgrounds and created "average faces" for each group using a method known as facial prototyping.

Facial prototyping derives the average facial shape and colouration from a collection of faces — perhaps a collection of elderly Caucasian males, or a group of young Chinese females. It is a representation of the consistencies across a collection of faces.

Prototyping is a three-stage process:

- Identify the locations of corresponding feature points on the source images, for example the eyes, mouth and cheekbones. Around 160 feature points are identified on each face. These points are used to divide the image into a set of triangles, and any point P within a triangle ABC is defined using two vectors, $V_1 = \overrightarrow{AB}$ and $V_2 = \overrightarrow{AC}$, where $\lambda_1$ and $\lambda_2$ specify how far along each vector the point lies, measured from vertex A: $$ P = A + \lambda_1 V_1 + \lambda_2 V_2 $$

- Calculate the average shape for the group and warp the faces into that configuration. This is a normalisation process that gives the faces nominally the same shape and orientation. The average left and right eye positions for the whole sample are calculated, and each face's feature points are translated, scaled and rotated so that its left eye maps to the average left eye position and its right eye to the average right eye position. The average position of each feature point across the aligned sample then defines the average shape, and each face is warped so that its feature points reside at those average positions.

- Blend the warped images together, averaging their colour pixel by pixel, to produce the average image.
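The triangle coordinates from the first step can be made concrete with a short sketch. It assumes P is expressed relative to vertex A, i.e. $P = A + \lambda_1 V_1 + \lambda_2 V_2$, and solves the resulting two-unknown linear system with Cramer's rule. The function names `triangle_coords`, `point_from_coords` and `is_inside` are hypothetical, and the article does not say how the Face of the Future software actually computes these values.

```python
from typing import Tuple

Point = Tuple[float, float]


def triangle_coords(p: Point, a: Point, b: Point, c: Point) -> Tuple[float, float]:
    """Hypothetical helper: solve p = a + lam1*(b - a) + lam2*(c - a) for (lam1, lam2)."""
    v1 = (b[0] - a[0], b[1] - a[1])  # V1 = AB
    v2 = (c[0] - a[0], c[1] - a[1])  # V2 = AC
    d = (p[0] - a[0], p[1] - a[1])   # P measured from vertex A
    det = v1[0] * v2[1] - v1[1] * v2[0]
    if det == 0:
        raise ValueError("degenerate triangle: A, B and C are collinear")
    # Cramer's rule for the 2x2 system d = lam1*v1 + lam2*v2
    lam1 = (d[0] * v2[1] - d[1] * v2[0]) / det
    lam2 = (v1[0] * d[1] - v1[1] * d[0]) / det
    return lam1, lam2


def point_from_coords(lam1: float, lam2: float, a: Point, b: Point, c: Point) -> Point:
    """Hypothetical helper: rebuild P = A + lam1*V1 + lam2*V2."""
    return (a[0] + lam1 * (b[0] - a[0]) + lam2 * (c[0] - a[0]),
            a[1] + lam1 * (b[1] - a[1]) + lam2 * (c[1] - a[1]))


def is_inside(lam1: float, lam2: float) -> bool:
    """P lies in (or on an edge of) triangle ABC when both coordinates are
    non-negative and they sum to at most 1."""
    return lam1 >= 0 and lam2 >= 0 and lam1 + lam2 <= 1


if __name__ == "__main__":
    lam = triangle_coords((1, 1), (0, 0), (4, 0), (0, 2))
    print(lam, is_inside(*lam))  # (0.25, 0.5) True
```

Because the same $(\lambda_1, \lambda_2)$ pair can be fed to `point_from_coords` with a *different* triangle, these two values are what let a point be carried from one face's triangle to the matching triangle on another face.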
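The eye-based normalisation, the averaging of feature points and the final blend can be sketched in the same style. This is a minimal illustration under several assumptions: each face's feature points are stored as an ordered list in which the same index always refers to the same feature, the indices of the two eye points are known, and the similarity transform (translation, uniform scale and rotation) is computed with complex-number arithmetic, which is simply a convenient choice here. The per-triangle warp into the average shape is not shown, so `blend` assumes its input images have already been warped and are stored as flat lists of RGB tuples. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Shape = List[Point]        # one face's feature points, same order for every face
Pixel = Tuple[float, ...]  # e.g. (r, g, b)


def align_by_eyes(shape: Sequence[Point], left: int, right: int,
                  target_left: Point, target_right: Point) -> Shape:
    """Translate, scale and rotate every point so the two eye points land on the targets."""
    el, er = complex(*shape[left]), complex(*shape[right])
    tl, tr = complex(*target_left), complex(*target_right)
    s = (tr - tl) / (er - el)  # rotation and uniform scale as one complex factor
    t = tl - s * el            # translation
    aligned = []
    for x, y in shape:
        z = s * complex(x, y) + t
        aligned.append((z.real, z.imag))
    return aligned


def mean_point(points: Sequence[Point]) -> Point:
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def average_shape(shapes: Sequence[Sequence[Point]], left: int, right: int) -> Shape:
    """Align every face on the average eye positions, then average each feature point."""
    avg_left = mean_point([s[left] for s in shapes])
    avg_right = mean_point([s[right] for s in shapes])
    aligned = [align_by_eyes(s, left, right, avg_left, avg_right) for s in shapes]
    return [mean_point([s[i] for s in aligned]) for i in range(len(shapes[0]))]


def blend(images: Sequence[Sequence[Pixel]]) -> List[Pixel]:
    """Average corresponding pixels of images already warped to the same shape."""
    n = len(images)
    return [tuple(sum(channel) / n for channel in zip(*pixels))
            for pixels in zip(*images)]
```

For example, two triangles of three points where the second is simply a scaled and shifted copy of the first (`[(0, 0), (2, 0), (1, 1)]` and `[(10, 10), (14, 10), (12, 12)]`, eyes at indices 0 and 1) collapse onto the same aligned shape, so their average keeps that shape, with the eyes at the average eye positions.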

Once the facial prototype has been determined, it is possible to take another photo and "morph" it towards the prototype. You upload a photo of yourself, tell the program your age and ethnicity, and indicate the positions of your eyes and mouth. This information is used to normalise your photo against the group to which you belong (in my case, young adult Caucasian males). Your facial features are then moved to the positions of the corresponding features in the prototype of the group into which you are morphing. This can change your eye shape, hairline and general facial shape, and your facial colouring also becomes that of the prototype.
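The article does not describe how the software moves your features, but a common way to do it is a piecewise affine warp over the triangles built from the feature points. The sketch below assumes your feature points and the prototype's are listed in the same order and share one triangulation. It uses inverse mapping: for each pixel of the output (prototype-shaped) image it finds the containing triangle, computes $\lambda_1$ and $\lambda_2$ there, and reuses those values in the matching triangle of your photo to find where to sample. The name `source_location` is hypothetical, and sampling the colour at that location (for example with bilinear interpolation) is left out.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]
Triangle = Tuple[int, int, int]  # indices into the feature-point lists


def _coords(p: Point, a: Point, b: Point, c: Point) -> Optional[Tuple[float, float]]:
    """(lam1, lam2) with p = a + lam1*(b - a) + lam2*(c - a); None if ABC is degenerate."""
    v1 = (b[0] - a[0], b[1] - a[1])
    v2 = (c[0] - a[0], c[1] - a[1])
    d = (p[0] - a[0], p[1] - a[1])
    det = v1[0] * v2[1] - v1[1] * v2[0]
    if det == 0:
        return None
    return ((d[0] * v2[1] - d[1] * v2[0]) / det,
            (v1[0] * d[1] - v1[1] * d[0]) / det)


def source_location(pixel: Point, triangles: Sequence[Triangle],
                    dst_points: Sequence[Point], src_points: Sequence[Point],
                    eps: float = 1e-9) -> Optional[Point]:
    """Hypothetical helper: map an output pixel (prototype shape) back to the user's photo.

    Returns None when the pixel lies outside every triangle of the prototype shape.
    """
    for i, j, k in triangles:
        lam = _coords(pixel, dst_points[i], dst_points[j], dst_points[k])
        if lam is None:
            continue  # skip degenerate triangles
        lam1, lam2 = lam
        if lam1 >= -eps and lam2 >= -eps and lam1 + lam2 <= 1 + eps:
            # Same lambdas, applied to the matching triangle in the user's photo
            a, b, c = src_points[i], src_points[j], src_points[k]
            return (a[0] + lam1 * (b[0] - a[0]) + lam2 * (c[0] - a[0]),
                    a[1] + lam1 * (b[1] - a[1]) + lam2 * (c[1] - a[1]))
    return None
```

With a single triangle whose prototype version is twice the size of the user's (`dst_points=[(0, 0), (4, 0), (0, 4)]`, `src_points=[(0, 0), (2, 0), (0, 2)]`), the output pixel `(1, 1)` maps back to `(0.5, 0.5)` in the user's photo. Mapping from output to source, rather than pushing source pixels forward, avoids leaving holes in the warped image.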

It is also possible to create hybrids; you can even give yourself chimp characteristics. This process combines 50% of your normalised photo with 50% of the chimp prototype. To calculate an image that is $n$% image A and $(100-n)$% image B, a set of intermediate feature points is calculated by taking, for each feature, a weighted average of its position in image A (weight $n$%) and in image B (weight $(100-n)$%). Both images are then warped to this intermediate shape and blended together with the weighting specified by $n$. The warp relies on the variables $\lambda_1$ and $\lambda_2$ defined earlier: a point's $\lambda$ values within a triangle of the intermediate shape locate the corresponding point in the matching triangle of each source image.
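Written as a formula, each intermediate feature point is $P_{\text{hybrid}} = \frac{n}{100} P_A + \left(1 - \frac{n}{100}\right) P_B$, and the same weights are applied to the colour of corresponding pixels once both images have been warped to that intermediate shape. A minimal sketch follows; the function names are hypothetical, feature points are assumed to be in the same order in both images, images are assumed to be flat lists of RGB tuples, and the warp between the two steps is omitted.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Pixel = Tuple[float, ...]


def _weight(n: float) -> float:
    """Convert the percentage n (share of image A) into a 0..1 weight."""
    if not 0 <= n <= 100:
        raise ValueError("n must be a percentage between 0 and 100")
    return n / 100.0


def intermediate_shape(shape_a: Sequence[Point], shape_b: Sequence[Point],
                       n: float) -> List[Point]:
    """Feature points that are n% image A and (100 - n)% image B."""
    w = _weight(n)
    return [(w * ax + (1 - w) * bx, w * ay + (1 - w) * by)
            for (ax, ay), (bx, by) in zip(shape_a, shape_b)]


def blend_pixels(img_a: Sequence[Pixel], img_b: Sequence[Pixel],
                 n: float) -> List[Pixel]:
    """Blend two images already warped to the intermediate shape, weighting A by n%."""
    w = _weight(n)
    return [tuple(w * ca + (1 - w) * cb for ca, cb in zip(pa, pb))
            for pa, pb in zip(img_a, img_b)]
```

A 50% chimp hybrid would correspond to calling both functions with `n=50`, passing your normalised photo as A and the chimp prototype as B; `n=100` returns image A unchanged and `n=0` returns image B.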

More morphed photos of Marc can be found on Flickr, whilst more information on the transformation process can be found on the Perception Lab website.