Computers, maths and minds

Many of us own computers, and we (well, most of us) have a rough idea that computers are made up of complicated hardware and software. But perhaps few of us know that the concept of a computer was envisioned long before these machines became ubiquitous items in our homes, offices and even pockets. And as we will see later, some have even suggested that our own brains are embodiments of this theoretical concept.

The first electronic computer was built during the second world war at Bletchley Park, as a device to crack the German Enigma code (see here to find out more). Instrumental in its construction was Alan Turing, who in 1936 had developed the theoretical machine mentioned above. To understand it, let's start with the underlying mathematical concept of a function. Mathematical functions are roughly like machines in that they take in some data and churn out some information. (There are many different metaphors on functions, but let's stick to this one.) They are sometimes also called mappings or transformations. An example is the function f(n) = 2n: given an input value for n, say n = 2, the function returns the value f(2) = 2 x 2 = 4.

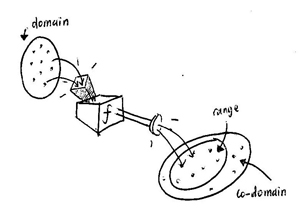

Figure 1: The idea of a function.

In order to specify a function it's not enough to come up with a formula, or other type of rule, like the one above. You also need to specify the domain and co-domain. The data, or input, we feed this machine, has to come from a set of elements; that's the domain. On the other hand, the information, or output, churned out by this machine belongs to another set of elements; that's the co-domain. Figure 1 illustrates these ideas. In our example above, if the domain consists of all the natural numbers, then the co-domain consists of all even natural numbers. If we specified the domain to consist of all real numbers on the number line, then the co-domain would also consist of all real numbers.

Functions play a fundamental role in mathematics, but pragmatists who wish to apply these wonderful ideas to build technological devices need to find ways to implement them in machines. A very good example of this is the computer, which processes instructions using algorithms. To paraphrase the Berkeley computer scientist Christos Papadimitriou, algorithms are "precise, unambiguous, mechanical, efficient, correct" procedures to generate outputs given inputs. In short, they are the means by which a function is executed on a machine.

What happens, though, if we try to conceptualise this entire procedure? Could we imagine an abstract entity that describes the whole "computer-algorithm-input-output" jazz?

Turing machines

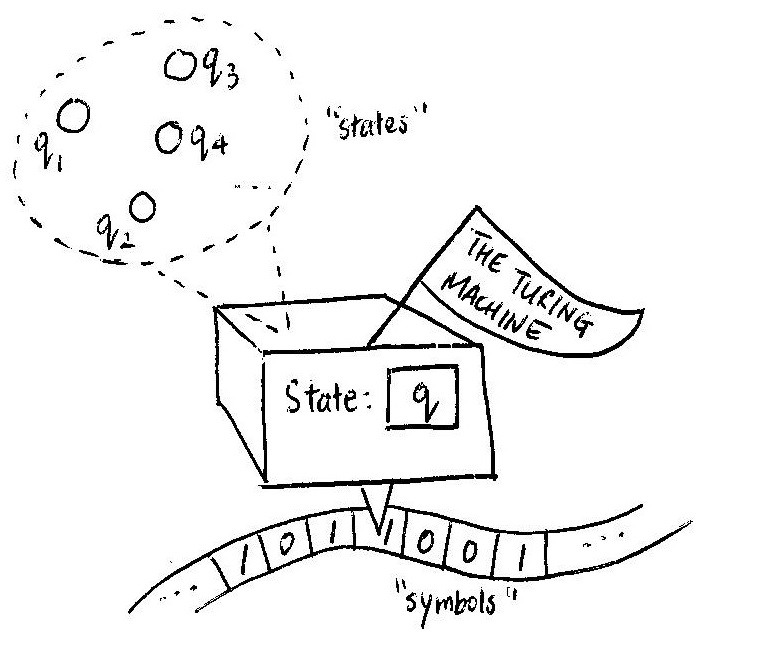

This is exactly what Turing did. He proposed a theoretical model of a computer, now called the Turing machine. A simplified version of the machine looks like this:

After reading off a symbol, the reader can either leave it as it is, or erase it and replace it with a new one. It then moves either one step to left or to the right and reads the symbol there, or stops altogether. Which one of these actions it takes, and what new symbol it writes if indeed it does, depends on the state it's in. Concurrently with these actions its state may also change. When it then meets the next symbol on the left or right, it reacts according to the state it is now in. The transitory instructions that tell the machine what to do at each step are collectively termed the machine's program.

An interesting way to capture a Turing machine's program is to use quintuples of symbols. For example, the quintuple $$0q1Rq^\prime$$ would signify "if you are reading a 0 and are in state $q$, then replace the 0 by a 1, move one step to the right and change your state to $q^\prime.$" Another quintuple now dictates what the machine is to do when it finds the symbol 1 after moving to the right. For example, $$1q^\prime1Rq^{\prime \prime}$$ tells the machine to leave the 1 as is, move another step to the right, and change to state $q^{\prime \prime}.$Given an input — a tape full of symbols — the Turing machine generates an output. And by programming it correctly, you can get it to perform all sorts of calculations. An example is the addition of positive natural numbers. If you want to add the two numbers 2 and 4, prepare your input tape with 1s in the first two positions, then a 0 in the next position along, followed by four 1s in the following four positions, and set all the other positions to 0. So you have

110111100000.....

The rules for the machine to follow are:

- State 0: If current position has a 1, move one step to the right;

- State 0: If current position has a 0, replace it with a 1, move one cell to the right and change state to state 1;

- State 1: If current position has a 1, move one cell to the right;

- State 1: If current position has a 0, move one cell to left and change state to state 2;

- State 2: If current position has a 1, replace by a 0 and stop.

By following these rules, the machine changes the 0 position inbetween the 1s to a 1 and goes to the end of the string of 1s and erases the last one. In this way, we get six 1s in a row, representing the desired result.

It could of course happen that a program results in the machine keeping on going forever, for example by entering an infinite loop. The problem of determining whether a given program will ever stop led to some very interesting developments in mathematical logic in its own right, see this article. But let's continue with our notion of functions.

Church, Turing and the Church-Turing thesis

Suppose we are given some mathematical function $f$ with the set of natural numbers as its domain, and a Turing machine is able to generate the output $f(n)$ for each input $n$. Then let's call $f$ Turing computable. Another way to see this is that the Turing machine model "works" for our particular function $f$.

Alan Turing

The obvious question now is which functions are Turing computable and can therefore be implemented on a Turing machine. If the function assigns outputs to inputs in a totally random way without rhyme or reason, we can't really hope to get it to work on a Turing machine, so let's restrict our attention to functions that assign an output to an input using a finite sequence of algorithms (i.e., a finite list of simple and mechanical instructions) — such functions are called computable.

Combining ideas of Turing computable functions and generally computable functions, we may easily convince ourselves that since the Turing machine seems to only involve three steps (moving left, moving right, replacing symbols, or halting) it is mechanical enough and so any function that is Turing computable is also computable. Likewise, since the Turing machine works in a mechanical fashion, perhaps any algorithm for computing a function is expressible as a program on a Turing machine; that is, computable functions are also Turing computable — if this is true, then any reasonably mechanical calculation can be implemented on a Turing machine.

The idea that computable functions are computable on a Turing machine is called the Church-Turing thesis, acknowledging the work of the American mathematician Alonzo Church. Alas, no one has been able to verify this seemingly true observation. This is partly because it's quite hard to pin down what exactly we mean by a more general computable function.

Brains as computers?

Assuming that the Church-Turing thesis holds (many researchers do anyway, and some have even considered using it as a defi nition!), it follows that all computers — Windows, iMac, you name it — are e ffectively modelled by Turing machines. Making an even greater intellectual leap, if we see the brain or mind as eff ectively a supercomputer of sorts, then what follows is that our mind is a Turing machine.

How does our brain work?

Some have suggested that there is increasing evidence for the truth of this. I recently attended a sharing session by Shaun Martin, a former mathematician, who introduced me to the "cognitive revolution" — the coming together of many disciplines including psychology, biology, philosophy, linguistics, anthropology and computer science, with the aim of understanding human cognition. The revolution provides pristine perspectives on the centuries-old question of "What is the mind"? (Interested readers might wish to visit this Wikipedia article.) And as we have just seen, there is a mathematical side to this endeavour as well.

As Martin pointed out, modern research from multiple disciplines, most notably psychology and experimental philosophy, seems to suggest a sort of underlying universal framework upon which every human mind is based. For example, experiments suggest that our brains are wired to respond in two different manners: quickly and intuitively, versus slowly and rationally. See the further reading list below for some books and articles that support this idea. If our responses are hard-wired, then presumably they could be mimicked by a sufficiently sophisticated computer.

If there is so much empirical data supporting this vantage point, then perhaps unsurprisingly there are also detractors who argue that the mind is more than a set of algorithms or functions. In the 1980s the American philosopher John Searle imagined a scenario, now known as the Chinese room thought experiment, in which a computer runs a set of instructions in Chinese translation so well so that it is perceived by passers-by as a real human being. Regardless, for Searle this does not mean that the computer possesses a mind or consciousness in the sense that humans do. That is, there is a fundamental distinction between the human mind and mere computer programs. (More arguments may be found in the articles listed in the further reading list below.)

We hitherto do not know enough to decide which theories we ought to perceive as correct. (I definitely don't.) Shaun Martin grinned delightfully at us when he talked about the mind as a Turing machine. Clearly, his beautiful mathematical insight into this multidisciplinary venture is that perhaps, while maybe the mind isn't all about algorithms, at least some part of it could be (the universal aspects as far as research reveals them to exist). And so it boils down to figuring out what these fundamental algorithms are that govern our existence, and what implications their discovery might have towards bigger questions concerning humanity.

Further reading

- Read more about Turing machines on Plus

- The blank slate by Steven Pinker

- Thinking, fast and slow by Daniel Kahneman

- Zoon Politikon: The evolutionary roots of human sociopolitical systems by Herbert Gintis and Carel van Schaik

- The fundamental distinction between brains and Turing machines by Andrew Friedman

- Morality is a culturally conditioned response by Jesse Prinz

About the author

Alan Aw is a maths enthusiast from Singapore who also loves running, reading, and thinking. He served in the Singapore army and will be studying at Stanford University in 2014.

Comments

Anonymous

How can anyone be sure that any Algorithm is: Precise, unambiguous mechanical efficient correct procedure ?

Perhaps I have missed the decisive and conclusive proof on this.

Anonymous

Algorithms are precise, unambiguous and mechanical by definition. To know for sure that an algorithm is correct for a given task, one has to prove it. Of course there is no single proof for all algorithms, but one has to prove the correctness of each algorithm separately.