This is an experimental venture by the LMS, in collaboration with Plus magazine, hoping to provide a wide range of readers with some idea of exciting recent developments in mathematics, at a level that is accessible to all members as well as undergraduates of mathematics. These articles are not yet publicly accessible and we would be grateful for your opinion on the level of the articles, whether some material is too basic or too complex for a general mathematical audience. The plan is to eventually publish these articles on the LMS website. Please give your feedback via the Council circulation email address.

The notion of distance is an intuitive one, hard-wired into our brains. Without it we would not be able to move through the world; to hunt, gather, or perform any meaningful task using the opposable thumbs nature has provided us with. This is reflected in mathematics: besides counting, geometry, the study of spatial extent, is the earliest mathematical pursuit humanity has engaged in.

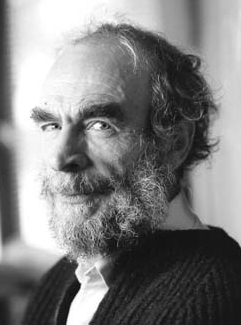

Mikhail Gromov came up with a brilliant way of defining distance between abstract spaces.

The distance between points in space is one thing, but we would also like to quantify the resemblance of shapes. We think of similar things as close — the faces of siblings may closely resemble each other, but can we say how far apart they are? Pinning down this type of distance exactly is tricky because there can be many aspects to compare. In the case of faces, do we want to measure the similarity of the nose, the eyes, some kind of average of the two, or neither? When it comes to measuring similarity, choices have to be made.

A step towards defining a metric to measure similarity is to place your objects into an ambient space. Facial recognition technology represents photos of faces as points in $N$-dimensional Euclidean space, where $N$ is the number of pixels in the photo and each pixel is represented by a numerical value indicating its colour. With pictures represented by points in an ambient space, it is then possible to analyse distances between them.

In mathematics, however, we often need to pursue abstraction: to rid our objects of an ambient context and make them stand on their own. When Bernhard Riemann developed the notion of manifolds back in the 19th century, his aim was to consider mathematical spaces intrinsically, independently of what other spaces they might be embedded in. How do we now measure the similarity of two such spaces without re-inserting them into an ambient space?

In 1981 the Russian-French mathematician Mikhail Gromov came up with a way of defining distance between abstract spaces that has been brilliantly successful in a number of very different contexts. The metric he defined, known as the Gromov-Hausdorff distance, ties together areas as diverse as Einstein's general theory of relativity and abstract group theory and topology, and it plays an important role in Perelman's proof of the Poincaré conjecture. It was one of the many achievements that earned Gromov the 2009 Abel Prize. But before we explore his ingenious idea let's recap some basic mathematical notions of space and distance.

Metrics, spaces and metric spaces

A metric space is a set $M$ together with a function $d: M \times M \rightarrow \mathbb{R}$ which defines the distance between two elements of $M$. The function $d$ must be symmetric, satisfy the triangle inequality, and assign a distance of zero to a pair of points if and only if the points are equal.

In the taxicab metric the blue, red and yellow paths between the two points all have the same length. The green path indicates Euclidean distance.
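The three axioms, and the claim that different taxicab routes have equal length, can be checked numerically. The following is a minimal Python sketch rather than anything from the original figure: the function names, the random sample of points and the particular paths from $(0,0)$ to $(6,6)$ are all assumptions chosen for illustration.

```python
import itertools
import math
import random


def euclidean(p, q):
    """Straight-line distance in the plane."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def taxicab(p, q):
    """Distance when only horizontal and vertical moves are allowed."""
    return abs(p[0] - q[0]) + abs(p[1] - q[1])


def squared_euclidean(p, q):
    """A non-example: this function breaks the triangle inequality."""
    return euclidean(p, q) ** 2


def satisfies_metric_axioms(d, points, tol=1e-9):
    """Check the axioms for d on every pair and triple of the given points."""
    for p, q in itertools.product(points, repeat=2):
        if abs(d(p, q) - d(q, p)) > tol:        # symmetry
            return False
        if (d(p, q) <= tol) != (p == q):        # zero exactly when equal
            return False
    for p, q, r in itertools.product(points, repeat=3):
        if d(p, r) > d(p, q) + d(q, r) + tol:   # triangle inequality
            return False
    return True


def path_length(d, path):
    """Total length of a polygonal path, measured segment by segment with d."""
    return sum(d(a, b) for a, b in zip(path, path[1:]))


# Hypothetical test data: a random sample and two grid routes to (6, 6).
rng = random.Random(0)
sample = [(rng.uniform(-5, 5), rng.uniform(-5, 5)) for _ in range(12)]
collinear = [(0, 0), (1, 0), (2, 0)]

corner = [(0, 0), (6, 0), (6, 6)]
staircase = [(0, 0)]
for i in range(6):
    staircase += [(i + 1, i), (i + 1, i + 1)]

print(satisfies_metric_axioms(euclidean, sample))
print(satisfies_metric_axioms(taxicab, sample))
print(satisfies_metric_axioms(squared_euclidean, collinear))
print(path_length(taxicab, corner), path_length(taxicab, staircase))
print(euclidean((0, 0), (6, 6)))
```

Both grid routes have taxicab length 12, while the straight segment is shorter in the Euclidean metric. The squared Euclidean distance is included only to show that a plausible-looking formula can fail the triangle inequality.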

There is also a notion of compactness for a general topological space that does not involve a notion of distance. A topological space is compact if every cover of the space by open sets has a finite sub-cover. For metric spaces the two definitions of compactness are equivalent.

Big and small

You can define all sorts of metrics on a topological space and it often makes sense to look for one which has some attractive geometric property such as minimising curvature in some sense. The need to minimise or maximise quantities comes up in many contexts. The best known example of such an extremal problem is provided by legend. When the soon-to-be Queen Dido arrived on the shores of North Africa having fled Tyre and her murderous brother, she asked the local ruler to give her as much land as could be enclosed by a single ox hide. She then proceeded to cut the hide into thin strips, tied them together at their ends and arranged the long strip that resulted in an arc of a circle meeting the coast at right angles. The ancient city of Carthage was founded on the terrain thus enclosed and was ruled happily by Dido until another calamity (love) befell her. If legend is to be believed then Dido had solved the isoperimetric problem: arrange a curve of a given length so that it encloses a maximal area.

Queen Dido and her lover Aeneas.
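To see why the circular arc is a good choice, one can compare it with a more naive layout. The sketch below is a Python illustration under two assumptions that are not in the legend itself: the coastline is treated as a straight line, so that an arc meeting it at right angles is a semicircle, and the competing shape is a rectangle with three sides made of hide and the fourth side formed by the coast. The function names are hypothetical.

```python
import math


def semicircle_area(length):
    """Area enclosed by a semicircular arc of the given length and a straight coast."""
    radius = length / math.pi          # arc length of a semicircle is pi * radius
    return 0.5 * math.pi * radius ** 2


def rectangle_area(length, depth):
    """Area of a rectangle with two sides of `depth` and one side along the far edge."""
    return depth * (length - 2 * depth)


def best_rectangle_area(length, steps=10_000):
    """Largest rectangle area found by scanning the depth from 0 to length / 2."""
    return max(
        rectangle_area(length, (length / 2) * k / steps) for k in range(steps + 1)
    )


strip = 1.0  # total length of the ox-hide strip, in arbitrary units
print(semicircle_area(strip))      # equals strip**2 / (2 * pi)
print(best_rectangle_area(strip))  # equals strip**2 / 8, attained at depth strip / 4
```

With a strip of unit length the semicircle encloses $1/(2\pi) \approx 0.159$ square units against $0.125$ for the best rectangle. This comparison only illustrates the claim; it does not prove that the semicircle beats every other curve.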

Over 2,500 years after Dido supposedly performed her clever trick mathematicians realised that extremal problems can carry a deep physical meaning. The French mathematician Pierre Louis Maupertuis (1698-1759) was one of the first to pronounce that "nature is thrifty": physical processes (such as a body moving from A to B) that could in theory take a range of different paths will always take the one that minimises something called action, a quantity that measures the effort involved. This principle of least action has explanatory as well as predictive power: Leonhard Euler showed that it implies Newton's laws of motion. In the same spirit, and as we shall see later, Albert Einstein sought a variational principle to develop his own theory of gravity, which superseded Newton's.

In maximising or minimising a quantity you must bear in mind that the maximum or minimum may not exist. (Think of finding an ellipse of unit area whose minor axis is minimal.) What you need is a criterion ensuring that a sequence of objects for which the desired quantity gets closer and closer to its maximum or minimum does itself tend to some allowable limit. In variational problems you are often seeking to maximise or minimise an aspect of a family of functions — for example the area under their graphs — defined on a metric space. To do this, a notion of convergence of functions is crucial: if a sequence of functions converges to a limiting function, then one can hope that the aspect under consideration has a limiting value too.
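The ellipse example can be made explicit. Writing $a \ge b > 0$ for the semi-major and semi-minor axes (notation introduced here purely for illustration), the unit-area condition determines $b$ in terms of $a$:

$$
\pi a b = 1 \quad\Longrightarrow\quad 2b = \frac{2}{\pi a} \longrightarrow 0 \quad \text{as } a \to \infty .
$$

The length of the minor axis can therefore be made as small as we like, but it is never zero: the sequence of ever thinner ellipses has no ellipse as its limit, so the minimum does not exist.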

There is an obvious notion of pointwise convergence: a sequence $\{f_n\}$ of functions defined on a common domain and with a common target converges to a function $f$ pointwise if for all $x$ in the domain the values $f_n(x)$ converge to $f(x)$. This is not a very useful concept, as a pointwise convergent sequence of continuous functions may converge to almost anything, even something wildly discontinuous. A stronger notion is *uniform convergence*, which requires that the speed of convergence does not get too slow as $x$ varies over the domain. If a family of functions has the property that any bounded infinite subfamily contains a subsequence which converges uniformly on compact subsets of the domain, then it is called *normal*. The subsequences must then converge to *continuous* functions. The point of a normal family is that though it is an infinite-dimensional space of functions it has the characteristic Bolzano-Weierstrass property of finite-dimensional Euclidean spaces.

We would like to know what property of a family of continuous functions will ensure that it is normal. This crucial property is *equicontinuity*. Looking at a point $x$ in the domain and a function $f$ in the family, we know that for any $\epsilon > 0$, sufficiently small balls around $x$ map entirely into the $\epsilon$-ball around $f(x)$. Just how small that ball centred on $x$ needs to be is a measure of how much the function $f$ expands things around the point $x$: how big its derivative is, if it is differentiable. If there is a uniform bound for the expansion, that is, if for a given $\epsilon$ there is a limit to how small the ball around $x$ needs to be, simultaneously for all functions $f$ and all points $x$, then the family is said to be *equicontinuous*. The Arzelà-Ascoli theorem states that a family of continuous functions between two compact metric spaces is normal if and only if it is equicontinuous.
This is particularly useful in complex analysis, where the derivative of a holomorphic function is bounded in terms of the function itself by Cauchy's integral formula. For holomorphic functions between domains in the complex plane the Arzelà-Ascoli theorem gives us *Montel's theorem*, which states that the family is normal if it is uniformly bounded: that is, there is a number $N>0$ such that $|f(z)| < N$ for all functions $f$ in the family and all points $z$ in the domain.
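The gap between pointwise and uniform convergence shows up already for a very simple family. The Python sketch below uses two example sequences on $[0,1]$ that are assumptions chosen for illustration, not taken from the discussion above: the powers $x^n$, which converge pointwise to a discontinuous limit, and the scaled functions $x/n$, which converge uniformly to zero. The supremum over the interval is only approximated on a finite grid.

```python
def power(n, x):
    """The n-th function of the sequence x, x^2, x^3, ... on [0, 1]."""
    return x ** n


def power_limit(x):
    """Pointwise limit of x^n on [0, 1]: zero everywhere except at x = 1."""
    return 1.0 if x == 1.0 else 0.0


def scaled(n, x):
    """The n-th function of the sequence x, x/2, x/3, ..., which tends to 0."""
    return x / n


def sup_gap(f, limit, n, grid_size=10_000):
    """Largest gap |f(n, x) - limit(x)| over an evenly spaced grid on [0, 1]."""
    grid = [k / grid_size for k in range(grid_size + 1)]
    return max(abs(f(n, x) - limit(x)) for x in grid)


for n in (1, 10, 100, 1000):
    gap_powers = sup_gap(power, power_limit, n)
    gap_scaled = sup_gap(scaled, lambda x: 0.0, n)
    print(n, round(gap_powers, 4), round(gap_scaled, 4))
```

For the powers the largest gap stays close to 1 however large $n$ is, so the convergence is not uniform, and the limit is indeed discontinuous at $x = 1$. This fits the equicontinuity criterion: the slope of $x^n$ at $x = 1$ is $n$, so there is no uniform bound on how much the functions expand things near that point. For $x/n$ the largest gap is $1/n$, which tends to zero.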

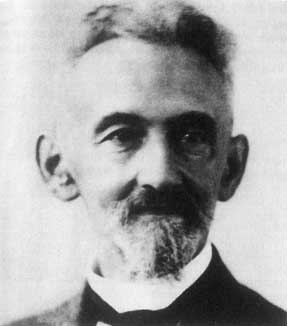

Bernhard Riemann (1826 - 1866) came up with the notion of manifolds and also with a (flawed) proof of the Riemann mapping theorem.

An interesting application of these results is the *Riemann mapping theorem*, first stated and proved by Bernhard Riemann in his PhD thesis in 1851. It states that any simply-connected domain $U$ of the complex plane, other than the whole plane itself, can be mapped holomorphically and bijectively to the unit disc $\mathbb D = \{z \in \mathbb C : |z| < 1\}$.

Distance and similarity


Matching shapes

A small Hausdorff distance between two sets means that the sets are not only close to each other in the ambient space, but also that they are in some sense similar metric spaces. (Only "in some sense", because the subset $S_n$ of the unit interval $I = [0,1]$ formed by the $n+1$ points $\{0,1/n,2/n,3/n, \ldots , 1\}$ has distance $1/(2n)$ from $I$, and so converges to $I$ as $n \to \infty$, even though each $S_n$ is a finite set and $I$ is not.) In any case, the converse is far from true. Two sets $X$ and $Y$ could be identical in shape, for example two circles with the same radius, but still be far away from each other in terms of Hausdorff distance, for example if the circles are centred on different points.

To decide how similar the two shapes are irrespective of where they are located in space we need another notion of distance, one that doesn't need any ambient space at all. To derive it, start with some intuition. Suppose you have a perfectly round circle $X$ drawn on a transparency and a slightly squashed circle $Y$ drawn on a second transparency. To see just how much $Y$ is deformed compared to $X$ you can glue the transparency with $X$ on it to your table top and then slide the $Y$ transparency around until you get the best fit.
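Both examples in this section can be tried out numerically. The following Python sketch assumes the usual definition of the Hausdorff distance between two sets: the largest distance from a point of either set to the nearest point of the other. The interval $I$ and the circles are replaced by finite samples, so the results are approximations, and the helper names and the choice of circles (unit radius, centres three units apart) are assumptions made for illustration.

```python
import math


def hausdorff(A, B, dist):
    """Hausdorff distance between two finite point sets A and B."""
    def directed(P, Q):
        # Farthest that any point of P is from its nearest neighbour in Q.
        return max(min(dist(p, q) for q in Q) for p in P)
    return max(directed(A, B), directed(B, A))


def on_line(a, b):
    """Distance between two real numbers."""
    return abs(a - b)


def in_plane(p, q):
    """Euclidean distance between two points of the plane."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def evenly_spaced(n):
    """The n + 1 points 0, 1/n, 2/n, ..., 1 (the set S_n from the text)."""
    return [k / n for k in range(n + 1)]


def circle(centre, radius, samples=360):
    """Evenly spaced sample points on a circle."""
    cx, cy = centre
    return [
        (cx + radius * math.cos(2 * math.pi * k / samples),
         cy + radius * math.sin(2 * math.pi * k / samples))
        for k in range(samples)
    ]


# A fine grid stands in for the whole interval I = [0, 1].
interval = evenly_spaced(1000)
for n in (5, 10, 50):
    print(n, hausdorff(evenly_spaced(n), interval, on_line), 1 / (2 * n))

# Two circles of identical shape, centred three units apart.
X = circle((0.0, 0.0), 1.0)
Y = circle((3.0, 0.0), 1.0)
print(hausdorff(X, Y, in_plane))
```

The first loop reproduces the value $1/(2n)$ for the sets $S_n$, and the last line gives a distance close to 3 for two circles that are perfect copies of each other. This is precisely the shortcoming that an ambient-free notion of distance has to overcome.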

Felix Hausdorff (1868-1942).

As we mentioned in the introduction, the Gromov-Hausdorff metric has proved incredibly useful. In the next article we will examine one of its applications as a vital tool in the proof of a major conjecture, not in geometry, but in abstract group theory.