This article is adapted from a chapter of Steven Brams' book, Game theory and the humanities: Bridging two worlds, published by The MIT Press and reproduced here with kind permission. The book has been reviewed in Plus.

Blaise Pascal, 1623-1662.

Is it rational to believe in a god? The most famous argument in favour of belief is that of Blaise Pascal, known as Pascal's wager. Assuming that we'll never know whether or not God exists, at least not in this world, Pascal advised that a prudent and rational person should believe. If it turns out that God does exist, then this strategy comes with a reward of infinite bliss in the afterlife. If God doesn't exist, the penalty is negligible: a few years of self-delusion. By comparison, if one believed that God did not exist, but this turned out to be false, one would suffer an eternity of torment. Faced with these options it's rational to believe.

Pascal's approach does not assume that God gives us any indication of his (for Pascal God was male) existence during our lifetime. But what if there is a god, or some sort of a superior being, who does interact with us in this world? A superior being that plays games with us? In this article we take a rather unusual approach, asking if mathematical game theory can help shed some light on these questions.

To explore the rationality of belief in some superior being, we will use the revelation game. In this game, the superior being, who we'll denote by SB, has two strategies: to reveal itself, which establishes its existence, and not to reveal itself. Similarly, the humble human, who we'll denote by P, has two strategies: to believe in SB, or not to believe in SB.

This gives four possible outcomes, described in the following table:

| | P: Believe | P: Don't believe |
|---|---|---|
| SB: Reveal | P faithful with evidence | P unfaithful despite evidence |
| SB: Don't reveal | P faithful without evidence | P unfaithful without evidence |

Which of the four outcomes do P and SB favour? Let's assume that each player has a primary and a secondary goal. SB's primary goal is for P to believe in its existence and its secondary goal is not to reveal itself. P's primary goal is to have some evidence about SB's existence or non-existence, while P's secondary goal is to believe.

I assume that P is neither an avowed theist nor an avowed atheist but a person of scientific bent, who desires confirmation of either belief or non-belief. Preferring the former to the latter as a secondary goal, P is definitely not an inveterate skeptic. What SB might desire is harder to discern. Certainly the god of the Hebrew Bible very much sought untrammeled faith and demonstration of it from his people. The justification for SB's secondary goal is that remaining inscrutable is SB's only way of testing its subjects' faith.

If the reader disagrees with my attribution of preferences, I invite him or her to propose different goals and to redo the analysis. In this manner, the robustness of the conclusions I derive can be tested.

The goals listed above suggest a ranking of SB's outcomes: its favourite outcome is for P to believe without revelation (we'll give this a 4 on SB's preference scale), followed by P believing and SB revealing itself (a 3 on the scale), followed by P not believing and SB not revealing itself (a 2 on the scale), followed by P not believing and SB revealing itself (a 1 on the scale).

For P the ranking is: P believing and SB revealing itself (4), P not believing and SB not revealing itself (3), P believing and SB not revealing itself (2) and P not believing and SB revealing itself (1). We indicate the preferences by pairs of numbers, e.g. (1,2), where the first number describes SB's ranking and the second one P's ranking:

| | P: Believe | P: Don't believe |
|---|---|---|
| SB: Reveal | P faithful with evidence (3,4) | P unfaithful despite evidence (1,1) |
| SB: Don't reveal | P faithful without evidence (4,2) | P unfaithful without evidence (2,3) |
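The two rankings can be reproduced mechanically from the stated goals by sorting the four outcomes lexicographically: primary goal first, secondary goal as tie-breaker. The following Python sketch does this. The strategy labels and function names are illustrative, and P's primary goal is read here as "P's stance is confirmed by the evidence" (belief with revelation, or nonbelief without it), which is an interpretive assumption rather than a definition taken from the text.

```python
from itertools import product

SB_STRATEGIES = ("reveal", "hide")
P_STRATEGIES = ("believe", "disbelieve")


def sb_goals(sb, p):
    # (primary, secondary) for SB: P believes; SB stays hidden.
    return (p == "believe", sb == "hide")


def p_goals(sb, p):
    # Assumed reading of P's primary goal: P's stance matches the evidence
    # (belief with revelation, or nonbelief without revelation).
    # Secondary goal: P believes.
    confirmed = (sb == "reveal") == (p == "believe")
    return (confirmed, p == "believe")


def rank_states(goals):
    # Sort lexicographically: the primary goal dominates, the secondary
    # goal breaks ties. False sorts before True, so rank 1 is the worst.
    states = list(product(SB_STRATEGIES, P_STRATEGIES))
    ordered = sorted(states, key=lambda s: goals(*s))
    return {state: position + 1 for position, state in enumerate(ordered)}


sb_rank = rank_states(sb_goals)
p_rank = rank_states(p_goals)
payoffs = {state: (sb_rank[state], p_rank[state]) for state in sb_rank}

for state, pair in payoffs.items():
    print(state, pair)
```

Readers who disagree with the attributed goals can edit `sb_goals` and `p_goals` and regenerate the table.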

What are SB and P's best strategies? For SB it makes sense not to reveal itself: this strategy is better whether P selects belief (because SB prefers (4,2) to (3,4)) or nonbelief (because SB prefers (2,3) to (1,1)). For SB, not revealing itself is what is called a dominant strategy. Assuming that P knows SB's preferences, she can work out that nonrevelation is SB's dominant strategy. Not having a dominant strategy herself, but preferring (2,3) to (4,2), she will choose not to believe as the best response.

These strategies lead to the selection of (2,3): SB does not reveal itself and P does not believe. The outcome (2,3) is a Nash equilibrium of the game, named after John Nash, the mathematician made famous by the film A Beautiful Mind. It's considered an equilibrium because no player has an incentive to move away from it unilaterally.

Note, however, that (3,4) - P believes and SB reveals itself - is better for both players than (2,3). But it is not a Nash equilibrium because SB, once at (3,4), has an incentive to depart from (3,4) to (4,2). Neither is (4,2) an equilibrium, because once there, P would prefer to move to (2,3). And of course, both players prefer to move from (1,1).

Thus, if the players have complete information and choose their strategies independently of each other, game theory predicts the choice of (2,3): no revelation and no faith. Paradoxically, this is worse for both players than (3,4). This is also the paradox in game theory's most famous game, the prisoner's dilemma, although the details of the two games differ.
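The dominance and equilibrium claims above can be checked by brute force. This is a minimal Python sketch: the dictionary layout, the strategy labels and the function names are illustrative choices, while the payoff pairs are those of the table.

```python
# Payoffs as (SB rank, P rank), indexed by (SB strategy, P strategy).
PAYOFFS = {
    ("reveal", "believe"): (3, 4),
    ("reveal", "disbelieve"): (1, 1),
    ("hide", "believe"): (4, 2),
    ("hide", "disbelieve"): (2, 3),
}
SB_STRATEGIES = ("reveal", "hide")
P_STRATEGIES = ("believe", "disbelieve")


def sb_dominant():
    # A strategy is dominant if it beats every alternative whatever P does.
    for s in SB_STRATEGIES:
        if all(PAYOFFS[(s, p)][0] > PAYOFFS[(t, p)][0]
               for t in SB_STRATEGIES if t != s
               for p in P_STRATEGIES):
            return s
    return None


def p_dominant():
    for s in P_STRATEGIES:
        if all(PAYOFFS[(sb, s)][1] > PAYOFFS[(sb, t)][1]
               for t in P_STRATEGIES if t != s
               for sb in SB_STRATEGIES):
            return s
    return None


def nash_equilibria():
    # A state is a Nash equilibrium if neither player gains by
    # unilaterally switching strategy.
    found = []
    for sb in SB_STRATEGIES:
        for p in P_STRATEGIES:
            sb_ok = all(PAYOFFS[(sb, p)][0] >= PAYOFFS[(t, p)][0]
                        for t in SB_STRATEGIES)
            p_ok = all(PAYOFFS[(sb, p)][1] >= PAYOFFS[(sb, q)][1]
                       for q in P_STRATEGIES)
            if sb_ok and p_ok:
                found.append(PAYOFFS[(sb, p)])
    return found


print(sb_dominant())      # hide
print(p_dominant())       # None
print(nash_equilibria())  # [(2, 3)]
```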

The theory of moves

John Nash won the 1994 Nobel Prize in Economics for his work in game theory. Image: Peter Badge / Typos1.

Classical game theory assumes that players make their choices simultaneously, or at least independently of one another. However, it may be more realistic to assume that games do not start with simultaneous strategy choices. Rather, play begins with the players already in some state, for example outcome (2,3) in our table above. The question then becomes whether or not they would benefit from staying in this state or departing from it, given the possibility that a move will set off a series of subsequent moves by the players. One way of capturing this is through the theory of moves (TOM) (which we've also explored in the Plus article Game theory and the Cuban missile crisis). The rules of TOM are as follows:

1. Play starts at an initial state (one of the four possible outcomes in the table).

2. Either player can unilaterally switch its strategy (make a move) and thereby change the initial state into a new state, in the same row or column as the initial state.

3. The other player can then respond by unilaterally switching its strategy, thereby moving the game to a new state.

4. The alternating responses continue until the player whose turn it is to move next chooses not to switch its strategy. When this happens, the game terminates in a final state, which is the outcome of the game.

These rules don't stop the game from continuing indefinitely. So let's add a termination rule:

5. If the game returns to the initial state, it will terminate and the initial state becomes the outcome.

After all, what is the point of continuing the move-countermove process if play will, once again, return to square one? (We'll see later what happens when we change this rule.)

A final rule of TOM is needed to ensure that both players take into account each other's calculations before deciding to move from the initial state:

6. Each player takes into account the consequences of the other's rational choices, as well as its own, in deciding whether or not to move from the initial or any subsequent state. If it is rational for one player to move but not the other, then the player who wants to move takes precedence. So the player wanting to move can set the process into motion.

Applying TOM to the revelation game throws up an obvious question: how can SB switch from revealing itself to un-revealing itself? Once SB has established its existence, can it be denied? I suggest that it can, but only if one views the revelation game as played out over a long period of time. Any revelatory experience may lose its immediacy and therefore its force over time and doubts may begin to creep in. Thus, a new revelatory act becomes necessary.

In TOM, if players have complete information about their opponent's preferences, they can look ahead and anticipate the consequences of their moves and thereby decide whether or not to move. They can do this using backwards induction. Here is an example:

| | P: Believe | | P: Don't believe |
|---|---|---|---|
| SB: Reveal | (3,4) | ← | (1,1) |
| | ↓ | | ↑ |
| SB: Don't reveal | (4,2) | → | (2,3) |

Backwards induction shows that it's rational for SB to move from the initial state (2,3).

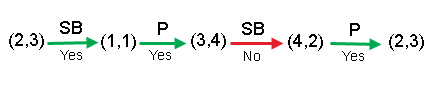

Suppose the initial state is the Nash equilibrium (2,3): no revelation and no faith. Would it be rational for SB to switch its strategy and reveal itself? If it does, then this may spark off a series of moves and countermoves. To find out if the initial move by SB is rational, we need to know where this series would end. So we look at the last possible move in such a series. Because of rule 5, this would be the move from (4,2) back to (2,3) after one cycle. It would be P's turn to decide whether to make this move. Since (2,3) leaves P better off than (4,2), we can assume that P would indeed make this move.

What about the previous move, from (3,4) to (4,2), which would correspond to SB's turn? SB would be better off moving from (3,4) to (4,2), but it knows that P would then move to (2,3), which leaves SB worse off. Thus, SB would not make the move from (3,4) to (4,2).

Would P make the previous move from (1,1) to (3,4)? She knows that SB would leave things at (3,4), and since that's better for P than (1,1), she would.

Now we're back to the initial state (2,3). SB knows that if it moves to (1,1), then P moves to (3,4), where the sequence would terminate. And since (3,4) is better for SB than (2,3), it is rational for SB to move from the initial state (2,3).

Would it be rational for P to move from the initial state (2,3)? A similar analysis shows that it would not. Hence, by rule 6, SB's desire to move takes precedence and sets the game rolling from the initial state (2,3) to end in the state (3,4) — a non-myopic equilibrium. Thus, when we allow players to take turns in switching their strategies it is indeed rational to move away from the Nash equilibrium to an outcome that's better for both players: for P to believe and SB to reveal itself.

We can do the same analysis for the three other possible initial states. It shows that (3,4) is the non-myopic equilibrium except when the initial state is (4,2). In this case, (4,2) is itself the non-myopic equilibrium.
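The backward induction described above can be sketched in Python. The function below follows one full cycle from a given initial state with a given first mover and then works backwards from the last possible move, keeping at each step the state that the player whose turn it is would settle for. Function names and strategy labels are illustrative. The sketch reports the outcome for each possible first mover separately and does not try to decide who moves first when both players would want to, as happens from (1,1); the precedence rule stated in this article covers only the case where exactly one player wants to move.

```python
# Payoffs as (SB rank, P rank), indexed by (SB strategy, P strategy).
PAYOFFS = {
    ("reveal", "believe"): (3, 4),
    ("reveal", "disbelieve"): (1, 1),
    ("hide", "believe"): (4, 2),
    ("hide", "disbelieve"): (2, 3),
}


def switch(state, player):
    # The named player flips its own strategy; the other's is unchanged.
    sb, p = state
    if player == "SB":
        return ("hide" if sb == "reveal" else "reveal", p)
    return (sb, "disbelieve" if p == "believe" else "believe")


def rank(state, player):
    return PAYOFFS[state][0 if player == "SB" else 1]


def other(player):
    return "P" if player == "SB" else "SB"


def outcome_if_first_mover(initial, first):
    # Lay out the cycle s0 -> s1 -> s2 -> s3 -> s0 with alternating movers.
    path, movers, player = [initial], [], first
    for _ in range(4):
        movers.append(player)
        path.append(switch(path[-1], player))
        player = other(player)
    # Rule 5: a return to the initial state ends the game there.
    survivor = path[4]
    # Backward induction: at each state the mover continues only if the
    # state that play would eventually reach is strictly better for it.
    for k in (3, 2, 1, 0):
        if rank(survivor, movers[k]) <= rank(path[k], movers[k]):
            survivor = path[k]
    return survivor


for state, pair in PAYOFFS.items():
    for first in ("SB", "P"):
        end = outcome_if_first_mover(state, first)
        print(pair, "first mover", first, "->", PAYOFFS[end])
```

From (2,3) the sketch gives (3,4) when SB moves first and (2,3) when P does, matching the walkthrough: only SB wants to move, so rule 6 lets SB set play in motion.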

Round and round

You might argue that there is no obvious starting position in the revelation game, so that the termination rule above (rule 5) is unrealistic. So let's replace it with a new termination rule:

- A player will only stop switching its strategy if it reaches the position that gives it its highest payoff.

| | P: Believe | | P: Don't believe |
|---|---|---|---|
| SB: Reveal | (3,4) | ← | (1,1) |
| | ↓ | | ↑ |
| SB: Don't reveal | (4,2) | → | (2,3) |

A quick look at our table shows that once the revelation game is set moving in an anti-clockwise direction, it will continue to cycle in that direction forever, as no player ever receives its best payoff when the game moves anti-clockwise and it has the next move. But is this termination rule realistic? Players only stop if they receive their best payoff, so this rule means that they may move from a preferred state to a worse state. In particular, SB will move from (2,3) to (1,1) under this rule. This may look strange, but it makes sense if SB hopes to be able to out-last P, so that continual cycling may enable SB to force its preferred outcome. In other words, the rule makes sense if SB has moving power.

With it, SB can induce P to stop at either (4,2) or (1,1). P would prefer (4,2), which gives SB its best payoff: P's belief without evidence satisfies both SB's goals. Thus, SB's moving power will make it rational for SB to keep switching its strategy until P finally gives up.

However, P only obtains her next-worst payoff in the final state (4,2), satisfying only the secondary goal of believing, but not the primary goal of having evidence to support this belief. Thus, if P after a while recovers her strength, then she may set the cycle in motion again.
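The claim that anti-clockwise play never stops under the revised termination rule can be checked directly: walk once around the cycle and see whether the player about to move is ever sitting on its best payoff (rank 4). The Python sketch below does this. The helper names are illustrative, and moving power itself is not modelled; only the stopping condition is.

```python
# Payoffs as (SB rank, P rank), indexed by (SB strategy, P strategy).
PAYOFFS = {
    ("reveal", "believe"): (3, 4),
    ("reveal", "disbelieve"): (1, 1),
    ("hide", "believe"): (4, 2),
    ("hide", "disbelieve"): (2, 3),
}


def switch(state, player):
    # The named player flips its own strategy; the other's is unchanged.
    sb, p = state
    if player == "SB":
        return ("hide" if sb == "reveal" else "reveal", p)
    return (sb, "disbelieve" if p == "believe" else "believe")


def rank(state, player):
    return PAYOFFS[state][0 if player == "SB" else 1]


def first_stop(start, first_mover):
    # Revised rule: a player stops only when it is at its best payoff.
    # Returns the state where play stops, or None if one full cycle
    # passes with no stop (so play would cycle indefinitely).
    state, player = start, first_mover
    for _ in range(4):
        if rank(state, player) == 4:
            return state
        state = switch(state, player)
        player = "P" if player == "SB" else "SB"
    return None


start = ("hide", "disbelieve")   # the state (2,3)
print(first_stop(start, "SB"))   # anti-clockwise: None
print(first_stop(start, "P"))    # clockwise: ('hide', 'believe')
```

Starting at (2,3) with SB moving first gives the anti-clockwise cycle, in which no mover is ever at rank 4. With P moving first, play runs clockwise and stops at once at (4,2), where SB has its best payoff and the next move.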

What does this analysis tell us? It suggests that there may be a rational basis for P's belief being unstable. Sometimes the evidence of SB's existence manifests itself and sometimes not. Over a lifetime, P may move back and forth between belief and nonbelief as seeming evidence appears and disappears.

We can broaden this perspective if we think of P not as an individual, but as a society spanning many generations. There are periods of religious revival and decline, which extend over generations and even centuries, that may reflect a collective consciousness about the presence or absence of an SB. For many today, this is an age of reason, in which people seek out a rational explanation. But there is also evidence of religious reawakening (for example, as occurred during the Crusades), which follows periods of secular dominance.

This ebb and flow is inherent in the cycling moves of the revelation game. Even if an SB, possessed of moving power, exists and is able to implement (4,2), this is unlikely to stick. People's memories erode after a prolonged period of nonrevelation. The foundations that support belief may crumble, setting up the need for new revelatory experiences and starting the cycle all over again.

About this article

This article is adapted from a chapter of Steven Brams' book, Game theory and the humanities: Bridging two worlds, ©MIT 2011, published by The MIT Press and reproduced here with kind permission. The book has been reviewed in Plus.

Steven J. Brams is Professor of Politics at New York University and the author, co-author, or co-editor of 17 books and about 250 articles. His recent books include two books co-authored with Alan D. Taylor, Fair Division: From Cake-Cutting to Dispute Resolution and The Win-Win Solution: Guaranteeing Fair Shares to Everybody; and Mathematics and Democracy: Designing Better Voting and Fair-Division Procedures (which has been reviewed in Plus). He is a Fellow of the American Association for the Advancement of Science, the Public Choice Society, and a Guggenheim Fellow. He was a Russell Sage Foundation Visiting Scholar and has been President of the Peace Science Society (International) and the Public Choice Society.