Information is bits.

We send information over the internet every day. All that information, whether it's a birthday email or your homework, is in encoded into sequences of 0s and 1s. What's the best way of doing this? The way that uses the least amount of 0s and 1s and therefore requires the smallest amount of computer memory.

Let's do a back-of-the-envelope calculation. Suppose we want to encode the 26 (lower case) letters of the alphabet, plus an additional symbol that denotes a space: each of the 27 symbols is to be represented by a string of 0s and 1s. How long do these strings need to be? Clearly they must be long enough to give a total of at least 27 distinct strings, so that we can associate them uniquely to our 27 symbols. There are $2^m$ strings of length $m$ (because we have two choices for each entry in the string) so we need $$2^m \geq 27.$$ Solving for $m$ gives $$m \geq \log_2{27} \approx 4.75.$$ This suggests that we need 5 bits per character to encode each of our 27 symbols. You can make a similar calculation for the 45,000 characters of the Mandarin language, giving $$\log_2{45,000} \approx 15.46$$ and suggesting that you need 16 bits per character to encode them. We still don't know the exact code to use, but at least we know how heavy it's going to be on our computer memory.Exploiting patterns

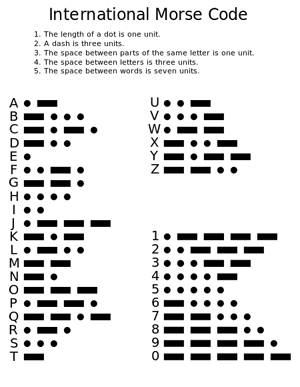

The calculation above is neat, but we can do better. "Any reasonable [code] would take advantage of the fact that some letters, like the letter "e" in English, occur much more frequently than others," explains Scott Aaronson, a computer scientist at the Massachusetts Institute of Technology. "So we can use a smaller number of bits for those." It's an idea that's been used in Morse code for over 150 years: here the more common letters are encoded using shorter strings of dots and dashes than the rarer ones.

.

bits per character. That's the same calculation as the one above. In particular, we need $\log_2{4}$ bits to identify the B and the C. For the letter A, the number $\log_2{4}$ is overkill: we don't actually need to distinguish between the two colours. So let's deduct the number of bits needed to distinguish between two different characters (corresponding to the two different colours), which is $\log_2{2}.$ This gives $$\log_2{4} - \log_2{2} = \log_2{\frac{4}{2}} = \log_2{2}$$ bits to represent the A. The average number of bits per symbol is now $$\frac{1}{2}\log_2{2} + \frac{1}{4}\log_2{4} + \frac{1}{4}\log_2{4} = 1/2 + 1/2 + 1/2 = 3/2=1.5$$ That's the same number we got before, which is reassuring. But we also notice that each term in the sum above is the frequency of one of the characters (e.g. 1/2 for A) times the logarithm of 1 divided by the frequency (e.g. $\log_2{2}$ for A). And it turns out that exactly the same way of thinking works in general for an alphabet of $n$ symbols. Writing $p_1$ for the frequency of symbol 1, $p_2$ for the frequency of symbol 2, and so on, an estimate for the average number of bits needed per symbol is $$p_1 \log{(1/p_1)} + p_2 \log{(1/p_2)} + p_3 \log{(1/p_3)} + ... + p_n \log{(1/p_n)}.$$

The birth of the bit

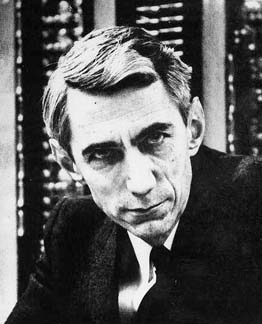

Thinking of information in terms of bits — 0s and 1s — seems like something that firmly belongs to the modern era, but the idea has been around for over 60 years. It was the mathematician Claude Shannon who in 1948 popularised the word "bit", which is shorthand for "binary digit". Shannon also came up with the formula we found above. Given an alphabet of $n$ symbols and a probability distribution telling you the probability $p_i$ with which a symbol $i$ occurs in a text made out of those symbols, the number

$$H = p_1 \log{(1/p_1)} + p_2 \log{(1/p_2)} + p_3 \log{(1/p_3)} + ... + p_n \log{(1/p_n)}$$

Claude Shannon.

is called the entropy of the distribution (see this article to find out more). Shannon proved that the average number of bits needed per symbol cannot be smaller than the entropy, no matter how cleverly you encode them. For the vast majority of alphabets and distributions, the average number of bits needed is in fact only slightly bigger than the entropy. (One encoding that comes very close to Shannon's entropy for the vast majority of alphabets and distributions is the so-called Huffman code.)

If you're in the business of sending messages long-distance, the entropy is a useful number to know. If you know you can transmit some number $C$ bits per second, and that the symbols in your message require around $H$ bits per symbol on average, then you'd guess that you can transmit around $C/H$ symbols per second on average. Shannon showed that this is indeed correct: by picking a clever way of encoding your symbols you can guarantee an average transmission rate that's as close to $C/H$ per second as you like. Conversely, no matter how you encode your symbols, it's impossible to transmit at a faster rate than $C/H.$ This neat fact is knows as Shannon's \emph{source coding theorem}.Shannon published this result in A mathematical theory of communication, "A paper that is justly celebrated as one of the greatest of the twentieth century," says Aaronson. It's a highly theoretical effort. The source code theorem, for example, doesn't tell you which code gets you within a specified distance of the maximal rate of C/H per second — it only says that there is one.

When it comes to practical applications, the theorem also has a major short-coming: it assumes that your communication channels transmit pristine messages and never introduce any errors. In real life this just doesn't happen: whether you send a message in a telegram, via email or using SMS, there is always a chance it is scrambled on the way and arrives at the other end corrupted. This is where things get interesting. How do you deal with so-called noisy channels and how much information can you transmit? Find out in the next article.

About this article

Marianne Freiberger is co-editor of Plus. She would like to thank Scott Aaronson, a computer scientist at the Massachusetts Institute of Technology, for a very useful conversation about information. And she recommends the book Information Theory: A Tutorial Introduction by James V. Stone as a mathematical introduction to the subject.

This article is part of our Information about information project, run in collaboration with FQXi. Click here to find out about other ways of measuring information.