
For an idea we are all familiar with, randomness is surprisingly hard to formally define. We think of a random process as something that evolves over time but in a way we can’t predict. One example would be the smoke that comes out of your chimney. Although there is no way of exactly predicting the shape of your smoke plume, we can use probability theory – the mathematical language we use to describe randomness – to predict what shapes the plume of smoke is more (or less) likely to take.

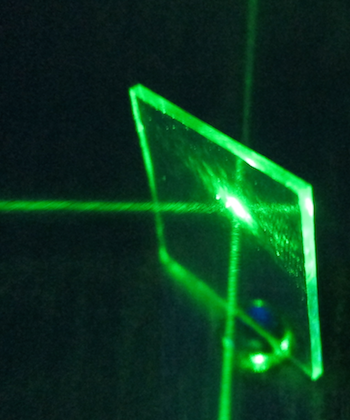

A beam splitter

Smoke formation is an example of an inherently random process, and there is evidence that nature itself is random at a fundamental level: the theory of quantum mechanics, which describes physics at the smallest scales of matter, posits exactly this. This is very different to the laws of 19th-century physics, such as Newton's laws of motion (you can read more about them here). Newton's physical laws are deterministic: if you were god and you had a precise description of everything in the world at a particular instant, then you could predict the whole future.

This is not the case according to quantum mechanics. Think of a beam splitter (a device that splits a beam of light in two): half of the light passes through the beam splitter, and half gets reflected off its surface. What would happen if you sent a single photon of light to the beam splitter? The answer is that we can't know for sure. Even if you were completely omniscient and knew everything about that photon and the experiment, there's no way you could predict whether the photon will be reflected or not. The only thing you can say is that the photon will be reflected with probability 1/2, and pass straight through with probability 1/2. (You can read more about quantum mechanics here.)

Insufficient information

Most of the time when we use probability theory, it's not because we are dealing with a fundamentally random process. Instead, it's usually because we don't possess all the information required to predict the outcome of the process. We are left to guess, in some sense, what the outcome could be and how likely the different outcomes are. The way we make or interpret these "guesses" depends on which interpretation of the concept of probability we agree with.

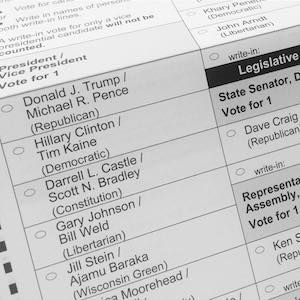

One of the 2016 US presidential ballots (Photo Corey Taratuta CC BY 2.0)

The first interpretation is subjective – you interpret a probability as a guess, made before the event, of how likely it is that a given outcome will happen. This interpretation is known as Bayesian, after the English statistician Thomas Bayes, who famously came up with a way of calculating probabilities based on the evidence you have.

In particular, Bayes' theorem in all its forms allows you to update your beliefs about the likelihood of outcomes in response to new evidence. An example is the changing percentages attached to candidates' chances of winning an upcoming election. It was widely believed that Hillary Clinton would win the US presidential election in 2016: going into the polls, she was estimated to have an 85% probability of winning. As information about the poll became available, this belief was continuously updated until the probability reached 0%, indicating certainty that she had lost.
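The updating described above can be sketched in a few lines of Python. This is only an illustration: the function name `bayes_update` is made up for this example, the 85% prior is the figure quoted in the text, and the likelihoods in `evidence_stream` are invented numbers, not real polling data.

```python
def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """Return P(hypothesis | evidence) using Bayes' theorem."""
    numerator = p_evidence_if_true * prior
    total = numerator + p_evidence_if_false * (1.0 - prior)
    if total == 0:
        raise ValueError("evidence has zero probability under both hypotheses")
    return numerator / total


# Prior belief taken from the text: an 85% chance of winning.
belief = 0.85

# Hypothetical pieces of evidence, each given as
# (P(evidence | win), P(evidence | lose)). Invented for illustration.
evidence_stream = [(0.4, 0.6), (0.3, 0.7), (0.0, 1.0)]

history = [belief]
for p_if_win, p_if_lose in evidence_stream:
    belief = bayes_update(belief, p_if_win, p_if_lose)
    history.append(belief)

print(history)
```

Each piece of evidence that is more likely under a loss than under a win pulls the belief down. The last piece is impossible under a win, so the belief drops to exactly 0, mirroring the certainty reached once the result is known.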

The other interpretation of probabilities is objective – you repeat the same experiment many times and record how frequently an outcome occurs. This frequency is taken as the probability of that outcome occurring and is called the frequentist interpretation of probabilities. (You can read more about these two interpretations in Struggling with chance.)
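As a rough sketch of the frequentist recipe, the snippet below simulates sending many photons at the beam splitter discussed earlier and records how often one is reflected. It assumes an ideal 50/50 splitter and uses Python's pseudo-random generator as a stand-in for the physical experiment, so it illustrates the counting procedure rather than quantum randomness itself. The function name `reflected_frequency` and the `seed` parameter are choices made for this example.

```python
import random


def reflected_frequency(n_photons, seed=0):
    """Fraction of simulated photons reflected by an ideal 50/50 splitter."""
    rng = random.Random(seed)  # fixed seed so that runs are repeatable
    reflected = sum(1 for _ in range(n_photons) if rng.random() < 0.5)
    return reflected / n_photons


# The observed frequency settles near 1/2 as the experiment is repeated more often.
for n in (10, 1_000, 100_000):
    print(n, reflected_frequency(n))
```

With only a handful of photons the observed frequency can be far from 1/2. The frequentist reading of "probability 1/2" is the value that this frequency approaches as the number of repetitions grows.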

Statisticians often argue about who is right: proponents of the first interpretation (the Bayesians) or those who believe more in the second interpretation (the frequentists). (For example, what would a frequentist interpretation of Clinton’s probability of winning the election be? You can't repeat an election multiple times!)

Agnostic statistics

As mathematicians we try to keep out of these arguments: we have the luxury of brushing these questions to the side. We only care about the maths of the theory of randomness, and that theory is fine! There is absolutely no controversy from a mathematical point of view and we have a well-defined theory of how to work with probabilities.

For example, suppose I have a six-sided die. Assuming the die is fair, we'd say the probability of rolling any particular number, say a 6, is 1/6. If I want the probability of rolling an even number, that is, a 2, a 4 or a 6, then I can add the probabilities of these three outcomes together as they are mutually exclusive:

P(even number) = P(2 or 4 or 6) = 1/6 + 1/6 + 1/6 = 1/2.

And if we had two dice and wanted to know the probability of rolling two 6s, then we would multiply the probabilities of the individual outcomes, as these outcomes are independent:

P(two 6s) = P(6 and 6) = 1/6 × 1/6 = 1/36.
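Both calculations can be checked by brute force: list every equally likely outcome and count the ones we are interested in. The sketch below assumes fair dice, and the helper name `probability` is made up for this example. It uses exact fractions, so no rounding is involved.

```python
from fractions import Fraction
from itertools import product

FACES = range(1, 7)  # the six faces of a fair die


def probability(event, n_dice=1):
    """Exact probability of `event` over all equally likely rolls of n_dice dice."""
    outcomes = list(product(FACES, repeat=n_dice))
    favourable = sum(1 for roll in outcomes if event(roll))
    return Fraction(favourable, len(outcomes))


p_six = probability(lambda roll: roll[0] == 6)
p_even = probability(lambda roll: roll[0] % 2 == 0)
p_two_sixes = probability(lambda roll: roll == (6, 6), n_dice=2)

print(p_even)       # 1/2
print(p_two_sixes)  # 1/36

# Addition rule for mutually exclusive outcomes (2, 4 and 6).
assert p_even == p_six + p_six + p_six
# Multiplication rule for independent dice.
assert p_two_sixes == p_six * p_six
```

Counting outcomes directly agrees with the addition rule for the single die and with the multiplication rule for the pair of dice.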

The maths for working with probabilities is completely well defined, regardless of your statistical religion. There are, however, two important concepts that we must consider when understanding probabilities – symmetry and universality – which we will explore in the next articles.

About this article

Martin Hairer

Martin Hairer is a Professor of Pure Mathematics at Imperial College London. His research is in probability and stochastic analysis and he was awarded the Fields Medal in 2014. This article is based on his lecture at the Heidelberg Laureate Forum in September 2017.