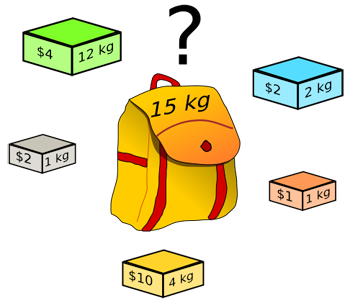

Imagine you're going to see your friends on the other side of the world and you've bought them so many presents, you can't fit them all into your luggage — some have to be left behind. You decide to pack a combination of presents that is highest in value to your friends, but doesn't exceed the weight limit imposed by the airline. How do you find that combination?

The knapsack problem. Image: Dake, CC BY-SA 2.5.

The obvious thing to do is to try all possible combinations, calculate their value and weight, and pick one that is below the weight limit but maximises value. This is fine if there are only a few items, but gets hard when many are involved: there are simply too many combinations to try out and your plane is leaving soon. The question is whether there is an algorithm — a recipe for finding a solution — which works for any number of items and doesn't take too long even when many items are involved.
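As a minimal sketch of this "try everything" approach, the following Python snippet enumerates every combination of presents. The presents, their weights and values, and the function name `best_combination` are made up for illustration.

```python
from itertools import combinations

def best_combination(presents, weight_limit):
    """Try every subset of presents; return (best_value, best_subset)."""
    best_value, best_subset = 0, ()
    for size in range(len(presents) + 1):
        for subset in combinations(presents, size):
            weight = sum(w for _, w, _ in subset)
            value = sum(v for _, _, v in subset)
            # Keep the subset if it fits and beats the best seen so far.
            if weight <= weight_limit and value > best_value:
                best_value, best_subset = value, subset
    return best_value, best_subset

# Hypothetical presents: (name, weight in kg, value to your friends)
presents = [("book", 1, 15), ("teapot", 3, 20), ("scarf", 1, 10), ("chess set", 4, 32)]
value, chosen = best_combination(presents, weight_limit=5)
print(value, [name for name, _, _ in chosen])
```

With four presents there are only 16 combinations to check, but every extra present doubles that number, which is why this approach quickly becomes impractical.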

This kind of problem interests computer scientists because creating algorithms, mechanical procedures a computer can execute, is what computer science is all about. Computer scientists have a way of measuring the complexity of a problem in terms of how fast the time it takes to solve the problem grows with the size of the input. For example, suppose the size of the input (the number of presents) is $n$. If the time it takes to solve the problem grows roughly like $n$, or $n^2$, or $n^3$, or $n^k$ for any other natural number $k$, as $n$ gets larger, then the problem is deemed "easy". The number $n^k$ could still grow fast with $n$ if $k$ is large, of course, but this kind of polynomial growth, as it's called, is nowhere near as explosive as exponential growth, described, for example, by the expression $2^n$. (See here for a more technical definition of so-called polynomial time algorithms.)
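To get a feel for the difference between polynomial and exponential growth, here is a small illustrative comparison. The choice of the exponent 3 and the sample input sizes are arbitrary assumptions, picked only to make the contrast visible.

```python
def polynomial_steps(n, k=3):
    """Number of steps for a hypothetical algorithm whose time grows like n**k."""
    return n ** k

def exponential_steps(n):
    """Number of steps for a hypothetical algorithm whose time grows like 2**n."""
    return 2 ** n

# Print the two step counts side by side for a few input sizes.
for n in (10, 20, 40, 80):
    print(n, polynomial_steps(n), exponential_steps(n))
```

For 10 presents the two counts are almost the same, but for 40 presents the exponential count is already over a trillion while the polynomial one is only 64,000.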

So does such a polynomial time algorithm exist for our problem? There's a slightly easier decision version of the problem. Rather than asking "what is the optimal combination of presents that stays under the weight limit but maximises value", we ask "given a particular value V, is there a combination that stays within the weight limit and has a value exceeding V?". This problem has the nice property that, given a potential solution (a combination of presents), it's very easy to check that it's correct: you only need to add up the weights and values, and you can do that in polynomial time. This property puts the decision version of the knapsack problem into a class of problems called NP. However, whether or not a polynomial time algorithm exists to solve the problem from scratch isn't known.
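Checking a proposed solution really is as simple as it sounds. The sketch below, with a hypothetical function name and made-up presents, adds up the weights and values of a candidate combination in a single pass.

```python
def is_valid_solution(subset, weight_limit, target_value):
    """Check a candidate answer to the decision version of the problem.

    Each present is a (name, weight, value) tuple. The check takes one
    pass over the subset, so its running time grows linearly with the
    number of presents.
    """
    total_weight = sum(w for _, w, _ in subset)
    total_value = sum(v for _, _, v in subset)
    return total_weight <= weight_limit and total_value > target_value

# Hypothetical candidate: a book and a chess set, with a 5 kg limit.
candidate = [("book", 1, 15), ("chess set", 4, 32)]
print(is_valid_solution(candidate, weight_limit=5, target_value=45))
```

Note that the check only confirms that this combination beats V within the weight limit. It says nothing about whether an even better combination exists.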

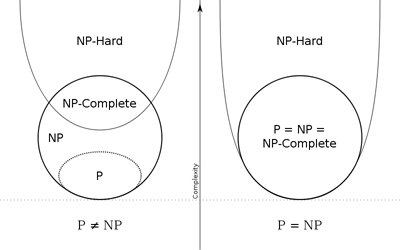

The hierarchy of complexity classes. The class P contains all those problems that can be solved in polynomial time. The class NP contains all those problems for which a potential solution can be checked in polynomial time. It's possible that the NP class is equal to the P class, though nobody knows if it is, which is why there are two diagrams: one for the case that P=NP and one for the case that it isn't. NP complete problems are within the NP class, but particularly hard, and NP hard problems are at least as hard as NP complete ones. Image: Behnam Esfahbod, CC BY-SA 3.0.

The decision version of the knapsack problem also has another amazing feature: if you do find a polynomial time algorithm to solve the decision knapsack problem, then you'll be able to derive a polynomial time algorithm for every problem in the NP class, which would be quite something. This feature means that the decision version of the knapsack problem belongs to a subclass of NP problems called NP complete. (Confused? See the diagram above.)

And the full knapsack problem? Well, it's at least as hard to solve as the decision version of the problem. Even given a potential solution, there's no known polynomial time algorithm that can tell you whether the solution is correct. But, as with the decision version of the problem, if you do find a polynomial time algorithm to solve the full knapsack problem, then you'll be able to derive a polynomial time algorithm for every problem in the NP class. The knapsack problem is a so-called NP hard problem.

Optimisation problems such as the knapsack problem crop up in real life all the time. Luckily there are efficient algorithms which, while not necessarily giving you the optimal solution, can give you a very good approximation for it. Thus, the question of whether the knapsack problem can be solved in polynomial time isn't that interesting for practical purposes; rather, it's something that enthuses theorists. When it comes to delivering presents, however, our advice is not to buy too many in the first place.
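One simple example of such an approximation, sketched here with made-up presents and a hypothetical function name, is a greedy rule: pack presents in order of value per kilogram, then compare the result with the single most valuable present that fits. Assuming all weights are positive, the better of the two is guaranteed to be worth at least half of the optimal value. This is only one of several known approximation methods, and not necessarily the one the article has in mind.

```python
def greedy_approximation(presents, weight_limit):
    """Greedy by value per unit weight, with a best-single-present fallback.

    Each present is a (name, weight, value) tuple with positive weight.
    Returns (total_value, chosen_presents).
    """
    # Ignore presents that could never fit on their own.
    fitting = [p for p in presents if p[1] <= weight_limit]
    if not fitting:
        return 0, []
    # Most value per kilogram first.
    by_density = sorted(fitting, key=lambda p: p[2] / p[1], reverse=True)
    chosen, remaining = [], weight_limit
    for present in by_density:
        if present[1] <= remaining:
            chosen.append(present)
            remaining -= present[1]
    greedy_value = sum(v for _, _, v in chosen)
    # Fallback: the single most valuable present that fits.
    best_single = max(fitting, key=lambda p: p[2])
    if best_single[2] > greedy_value:
        return best_single[2], [best_single]
    return greedy_value, chosen

# Hypothetical presents: (name, weight in kg, value to your friends)
presents = [("book", 1, 15), ("teapot", 3, 20), ("scarf", 1, 10), ("chess set", 4, 32)]
approx_value, packed = greedy_approximation(presents, weight_limit=5)
print(approx_value, [name for name, _, _ in packed])
```

For these presents the greedy rule packs the book, the scarf and the teapot for a value of 45, slightly short of the best possible value of 47 (the book and the chess set), but found after a single sort and one pass through the list.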

You can find out more about the complexity of algorithms on Plus.

Comments

solution

Convert it to a linear programming problem to be solved using the simplex method.

Let there be a variable for every present, and constrain every variable to lie between 0 (do not pack) and 1 (do pack). The weight limit is another linear constraint, and the total value of presents packed is the linear objective function.
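As a rough illustration of the relaxation described above (not the commenter's own code), the sketch below lets each present be packed fractionally. It does not run the simplex method. With a single weight constraint and positive weights, filling the bag in order of value per kilogram is known to reach the same optimum for this relaxed problem, so that simpler rule is used instead. The presents and the function name are made up.

```python
def fractional_relaxation(presents, weight_limit):
    """Solve the relaxed problem where each variable lies between 0 and 1.

    Each present is a (name, weight, value) tuple with positive weight.
    Returns (total_value, fractions), where fractions maps each name
    to the fraction of that present that is packed.
    """
    # Most value per kilogram first.
    by_density = sorted(presents, key=lambda p: p[2] / p[1], reverse=True)
    fractions, remaining, total = {}, weight_limit, 0.0
    for name, weight, value in by_density:
        # Pack as much of this present as the remaining weight allows.
        fraction = min(1.0, remaining / weight)
        fractions[name] = fraction
        total += fraction * value
        remaining -= fraction * weight
    return total, fractions

# Hypothetical presents: (name, weight in kg, value to your friends)
presents = [("book", 1, 15), ("teapot", 3, 20), ("scarf", 1, 10), ("chess set", 4, 32)]
relaxed_value, fractions = fractional_relaxation(presents, weight_limit=5)
print(relaxed_value, fractions)
```

Here the relaxed optimum packs three quarters of the chess set. Its value is an upper bound on what any whole-present combination can achieve, but it is not itself a packable solution.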

Granted, the optimal solution may (and probably will) involve a fractional number for various presents, and you may ask, "How can I pack 2/5 of a given present, when I can only either pack the whole thing or not pack any of it?" Well, the next step is to transform the whole space, including your fractional optimal solution, so that the hyperplane (a flat space of one fewer dimension than the number of presents) that corresponds to the optimal level (and contains your optimal solution point) contains all of the transformed coordinate axes except for the last one. The feasible integer vertices (those that correspond, for every present, to either packing it or not packing it, and that do not cause the total allowable weight to be exceeded) are transformed to points on one side of that hyperplane and have a positive last coordinate, while the non-feasible integer vertices are transformed to the other side and have a negative last coordinate. Then the true integral optimal solution will be the integer vertex that was transformed to the point with the minimum positive last coordinate.

correction of mistake in my last post about problem's solution

I submitted a comment on how this problem could be solved as a linear programming problem, using the simplex method. I said that every present could be a variable, ranging from 0 (don't pack) to 1 (do pack), that the weight limit could be an additional constraint, and that the total value could be the objective function to maximize.

The optimal solution would likely involve fractional values for the present variables, but only integer solutions (0 or 1) are possible. I added that the entire space could be essentially rotated (and translated) so that the hyperplane containing the optimal value is transformed to the hyperplane with the equation that the last coordinate is 0, and all of the integral feasible points that met the total weight constraint would be mapped to points with the last coordinate positive. Then I said that, of all the images of the integral feasible points under the rotation/translation, the optimal integer solution would correspond to the image point with the lowest positive value for the last coordinate.

Well, I confused the hyperplane of the optimal total value with the hyperplane of the maximum possible weight, so I modify my algorithm accordingly. Instead of the rotation/translation, use a linear (or affine) transformation with the following properties. It maps the hyperplane of the optimal value to the hyperplane where the last coordinate is 0, and the hyperplane of the maximum allowable weight to the hyperplane where the second last coordinate is 0. A positive last coordinate indicates that the total value is less than the optimal, and a negative last coordinate indicates that it is greater than the optimal. A positive second last coordinate indicates an allowable total weight. Finally, the absolute value of the last coordinate is the difference between the total value and the optimal value. (There is at least one transformation for which all this is possible.)

Finally, of all the images under the linear/affine transformation of the integral feasible points, the optimal integer solution will be the one with the positive second last coordinate and the minimum positive last coordinate.