Why quantum mechanics?

Towards the end of the 19th century people thought that physics was done and dusted. Elegant theories described all the natural phenomena people had observed, and only minor details needed tidying up. Over the next few decades, however, alarming cracks started to open up in the theory. Observations showed that, when examined at a very small scale, nature just didn't behave as people thought it should. Quantum mechanics was developed to explain this newly emerging picture. To give you an idea of what was going on, we'll have a brief look at two of those awkward new discoveries.

A prism splitting white light into its constituent frequencies. But can light really be thought of as waves?

Schizophrenic light

One of them concerned the nature of light. Since the 1870s physicists had been certain that light travelled in waves, electromagnetic waves to be precise (see here for more). It's an idea you are probably familiar with. Waves of different frequencies correspond to light of different colours, and when many waves of different frequencies mix, the light we see is white.

The explanation of light as electromagnetic waves was, and still is, hailed as a great advance in physics, but there were problems. One of them came up when you shone a beam of light at a metal. As expected, the light would knock electrons out of the metal, but it did so in a way a wave wouldn't be able to. This phenomenon is called the photoelectric effect (see Light's identity crisis to find out more) and it puzzled people for some time.

It was Albert Einstein who, in 1905, proposed a solution to the problem. He suggested thinking of light not as waves, but as tiny little packets (or quanta) of energy. This new way of looking at things perfectly explained the photoelectric effect, but it didn't replace the wave picture of light. In order to explain all phenomena involving light, you need to hang on to the wave picture too. Light is like waves in some ways, and it's like particle-like packets in others. It just can't seem to make up its mind.
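To get a feel for the packet picture, here is a rough sketch in Python. It relies on two standard textbook relations that are not spelled out in this article: each packet carries an energy equal to Planck's constant times the light's frequency, and an electron only escapes the metal if a single packet carries more energy than the metal's "work function". The work function value below is an assumption chosen purely for illustration (it is roughly that of sodium), and the function names are made up for this example.

```python
# Physical constants in SI units (exact values under the 2019 SI definitions).
PLANCK_H = 6.62607015e-34        # Planck constant, J*s
LIGHT_SPEED = 299_792_458.0      # speed of light, m/s
ELECTRON_VOLT = 1.602176634e-19  # joules per electronvolt


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of one packet of light, E = h*c/wavelength, in electronvolts."""
    wavelength_m = wavelength_nm * 1e-9
    return PLANCK_H * LIGHT_SPEED / wavelength_m / ELECTRON_VOLT


def max_electron_energy_ev(wavelength_nm: float, work_function_ev: float) -> float:
    """Largest energy an ejected electron can have, or 0.0 if none escapes."""
    surplus = photon_energy_ev(wavelength_nm) - work_function_ev
    return max(surplus, 0.0)


# Assumed, illustrative work function (roughly that of sodium), in eV.
WORK_FUNCTION_EV = 2.3

for colour, wavelength_nm in [("red", 700.0), ("green", 530.0), ("violet", 400.0)]:
    energy = max_electron_energy_ev(wavelength_nm, WORK_FUNCTION_EV)
    print(f"{colour:>6} light ({wavelength_nm:.0f} nm): {energy:.2f} eV")
```

Notice that the brightness of the light appears nowhere in the calculation. In this picture a brighter beam just means more packets, not more energetic ones, so red light fails to free any electrons from this metal however bright it is. That is the kind of behaviour a pure wave theory struggles to explain.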

Collapsing atoms

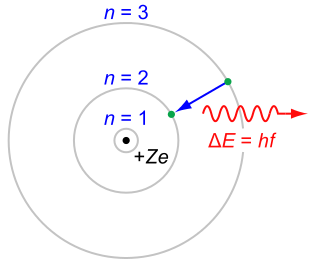

The Bohr model of the atom. Electrons are only permitted to be at certain distances from the centre. Image: CC BY-SA 3.0.

Another new observation involved the structure of atoms. At the start of the 20th century physicists thought of atoms as looking like plum puddings: they contained negatively charged electrons (the plums), surrounded by an evenly spread-out positive charge (the pudding). In 1909, however, the physicist Ernest Rutherford and some of his colleagues made a curious discovery while firing tiny positively charged particles, called alpha particles, at a thin gold foil. According to the plum pudding picture, the particles should simply have sailed through the foil. But to their great surprise, the researchers saw some of them bounce off. This, Rutherford said later, was "almost as incredible as if you fired a 15-inch shell at a piece of tissue paper and it came back and hit you."

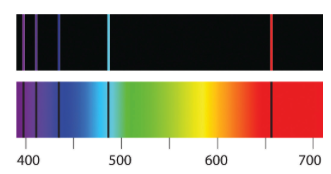

The top shows the emission spectrum of the hydrogen atom — notice there are discrete lines, rather than a continuous spectrum. The bottom shows the absorption spectrum. Image: ChemWiki, CC BY-NC-SA 3.0 US.

Rutherford's subsequent explanation was as simple as it was clever. If, rather than being spread out, the positive charge in the gold atoms was concentrated at their centre, it would then be strong enough to deflect the occasional alpha particle unlucky enough to hit that centre. Rutherford's idea led to the "solar system" model of the atom, with positive charge at its centre, orbited by negatively charged electrons.

The trouble with this, however, is that electrons orbiting a nucleus should, according to theory, continually radiate away some of their energy. The loss of energy would in turn lead to the electrons spiralling into the nucleus, causing the atom to eventually collapse in on itself. What is more, the pattern of energy radiated away would be continuous, looking nothing like what people had actually observed: the sharply defined lines of the energy spectrum of the hydrogen atom, first observed in 1885.

The solution to this conundrum was delivered in 1913 by Niels Bohr. Bohr suggested that electrons could not orbit the nucleus of an atom at any distance they liked: only certain distances were allowed. When an electron loses energy, it doesn't gradually inch inwards towards the nucleus, but performs a leap — a quantum jump — to the next allowed distance. When it has reached the smallest allowed distance, it can't go any nearer the nucleus and stays where it is. The atom therefore remains stable. Bohr's model also had another advantage: it explained the discrete spectrum of the hydrogen atom mentioned above, which had hitherto puzzled people.
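The link between allowed orbits and sharp spectral lines can be sketched with a few lines of Python. The sketch assumes the standard textbook formula for Bohr's hydrogen atom, which this article doesn't state: the electron in the n-th allowed orbit has energy of about −13.6 electronvolts divided by n squared. When the electron jumps inwards, the energy it loses leaves the atom as a single packet of light. The function names are invented for this illustration.

```python
RYDBERG_ENERGY_EV = 13.605693  # energy needed to ionise hydrogen, in eV
HC_EV_NM = 1239.841984         # Planck constant times speed of light, in eV*nm


def level_energy_ev(n: int) -> float:
    """Energy of the n-th allowed orbit in Bohr's model (n = 1 is the innermost)."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return -RYDBERG_ENERGY_EV / n**2


def jump_wavelength_nm(n_from: int, n_to: int) -> float:
    """Wavelength of the light emitted when an electron jumps to an inner orbit."""
    if n_from <= n_to:
        raise ValueError("emission needs a jump from an outer to an inner orbit")
    released = level_energy_ev(n_from) - level_energy_ev(n_to)
    return HC_EV_NM / released


# Jumps that end on the second orbit give light in the visible range.
for n_from in range(3, 7):
    print(f"{n_from} -> 2: {jump_wavelength_nm(n_from, 2):.1f} nm")
```

Because only whole-number orbits are allowed, only a handful of jump energies are possible, and so only a handful of wavelengths show up. The four jumps printed here land close to the visible hydrogen lines near 656, 486, 434 and 410 nanometres, rather than smearing out into a continuous band.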

The new phenomena and their explanations showed that something was deeply amiss in the classical theories. Nature had never before been thought to "make jumps" or go in for schizophrenic behaviour. It was clear that a brand-new theory was needed, one which could take these strange new behaviours in its stride and didn't need any band aids to patch up gaps. That theory is quantum mechanics. You can find out more in this series of articles.

About this article

Marianne Freiberger is Editor of Plus.