Convex is complex

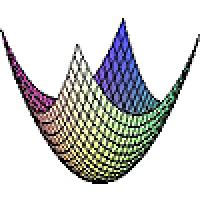

A convex function. Image: Eli Osherovich.

Convex or concave? It's a question we usually answer just by looking at something. It's convex if it bulges outwards and concave if it bulges inwards.

But when it comes to mathematical functions, things aren't that simple. A team of computer scientists from the Massachusetts Institute of Technology have recently shown that deciding whether a mathematical function is convex can be very hard. It's a result that's not just of interest to mathematicians, but also to engineers and anyone else in the business of optimising things. But luckily, the team have also found a way around this problem that works in most real-life situations.

A continuous function is convex if the area above its graph is a convex set, in other words if the straight line that connects any two points on its graph lies above the bit of the graph between these two points. More formally, a function $f$ is convex if for all points $x_1$ and $x_2$ and for all $t$ with $0 \leq t \leq 1$ we have $$f(tx_1+(1-t)x_2)\leq tf(x_1)+(1-t)f(x_2).$$ A function $f$ is concave if $-f$ is convex. (These definitions also work for functions of several variables, that is functions $f:\mathbb{R}^n \rightarrow \mathbb{R}$, as long as f is defined on the points in question. In this case $x$ should be understood as a vector made up of the variables involved.)

The graph of a convex function of two variables (top) and the graph of a non-convex function of two variables (bottom).

This is the question addressed by Amir Ali Ahmadi, Alex Olshevsky, Pablo A. Parrilo, and John N. Tsitsiklis in their paper. The team only looked at a class of functions that are relatively easy to deal with, namely polynomials. These functions are sums of several terms, where each term is a product of the variables, each raised to some power, and then multiplied by a constant, for example:

$$f(x)=x^4+2x^3+5x^2-6x+9,$$ and $$f(x,y)=x^8+7xy^2+2y^3+9.$$ The degree of each individual term is the sum of the powers of the variables, and the degree of the whole polynomial is the maximum of the degrees of the individual terms. In their paper the researchers only looked at polynomials with an even degree, since checking for convexity in the odd degree case is easy. The question wasn't how to check whether a given polynomial is convex --- there's a well-known technique for doing this --- but how long such a check would take for an arbitrary polynomial. Clearly the problem gets harder the more terms there are in the polynomial, so we expect the answer to depend on the number of terms, which in turn is related to the number of variables. In complexity theory the "time" it takes to solve a problem is measured in terms of the number of steps a computer needs to make to complete the task. This depends on how many components the problem has to start with. For example, to find the largest of a list of $n$ numbers, you need to look at each individual number and compare it to the largest one you've found so far. There are $n$ steps in this algorithm, so we say that its execution time is proportional to $n$. Other problems may have an execution time proportional to $n^2$, $n^3$, or $n^k$ for some other positive number $k$. This of course means that the execution time increases quite rapidly as $n$, the size of the initial problem, gets bigger. But it's nothing compared to an execution time that grows exponentially with $n$, for example one proportional to $2^n$. Algorithms whose execution time is proportional to some polynomial in $n$ are called polynomial time algorithms. They are considered to be relatively fast. The class of problems that can be solved by such algorithms is known as P.

Knowing if a function is convex can help with minimisation problems, like minimising the fuel usage of a car.

But there is also another class of problems which is denoted by NP (standing for nondeterministic polynomial time). They've got the property that if anyone hands you the answer to the problem, you can check in polynomial time if it's correct. But can you solve these problems from scratch in polynomial time? Nobody knows for sure. This is the subject of the famous P=NP problem, which is one of the biggest open questions in maths. But although nobody has found a proof yet, the general hunch is that you can't: solutions to NP problems can be verified, but not found, in polynomial time. In other words, P is not equal to NP.

Now let's go back to the problem of convexity. The question was how the execution time of an algorithm that checks an arbitrary polynomial for convexity grows as the number of terms in the polynomial grows. What the researchers showed in their paper is that the problem is NP-hard. This means, loosely speaking, that it's at least as hard as any problem in the NP class. So unless someone proves against expectations that P=NP, we can assume that the question "is it convex?" can't be answered for an arbitrary polynomial in a time efficient way. As the number of variables in the polynomial increases, the time it takes to answer the question explodes.

But luckily there is a loophole. There is another property, called sum-of-squares convexity, which can be checked in polynomial time. Any polynomial that's sum-of-squares convex is also convex. For most polynomials you're likely to encounter in real-life problems, this also works the other way around: if they are convex, they are also sum-of-squares convex. So in these instances it's enough to look for sum-of-squares convexity. This gives ground for optimism: perhaps the hardness of the convexity problem is due to a few very awkward examples of polynomials which hopefully won't turn up in real-life applications.