Information, decisions and bits

This article is part of our Information about information project, run in collaboration with FQXi. Click here to see other articles from the project.

The article is adapted from James V. Stones' recent book Information Theory: A Tutorial Introduction.

We're all familiar with units: a minute is a chunk of time, a metre is a chunk of length, and a bit is a chunk of information. But hang on a second — what do we mean by a "chunk of information"? Why do bits provide the right kind of chunk, and how do they relate to binary digits, that is, to 0s and 1s?

Finding a route, bit by bit

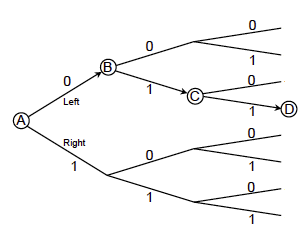

A bit is the amount of information you need to choose between two equally probable alternatives. Imagine you are standing at the fork in the road at point A in the figure below, and that you want to get to the point marked D. Note that this figure represents a bird's-eye view, which you do not have; all you have is a fork in front of you, and a decision to make. If you have no prior information about which road to choose then the fork at A represents two equally probable alternatives. If I tell you to go left then you have received one bit of information. If we represent my instruction with a binary digit (0=left and 1=right) then this binary digit provides you with one bit of information, which tells you which road to choose.

In fact, binary digits, that is 0s and 1s, can be used to represent the whole journey from A to D. Imagine that you stroll on down the road and you come to another fork, at point B in the figure. Again, because you have no idea which road to choose, a binary digit (1=right) provides one bit of information, allowing you to choose the correct road, which leads to the point marked C.

One bit of information corresponds to a choice between two equally probable alternatives.

A third binary digit (1=right) provides you with one more bit of information, which allows you to again choose the correct road, leading to the point marked D.

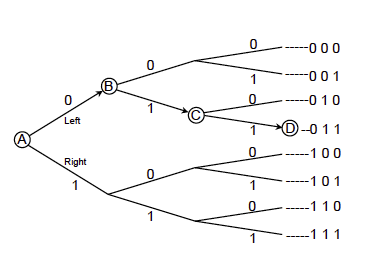

There are now eight roads you could have chosen from when you started at A, so three binary digits (which provide you with three bits of information) allow you to choose from eight equally probable alternatives; 8 happens to equal $2 \times 2 \times 2 = 2^3$. Note that the decision taken at A excluded half of the eight possible destinations shown in the figure that you could have arrived at. Similarly, the decision taken at each successive fork in the road halved the number of remaining possible destinations.A journey of eight alternatives

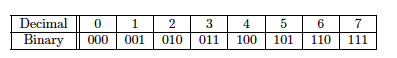

We can restate this in more general terms if we use $n$ to represent the number of forks, and $m$ to represent the number of final destinations. If you have come to $n$ forks, then you have effectively chosen from $$m = 2^n$$ final destinations. Because the decision at each fork requires one bit of information, $n$ forks require $n$ bits of information, which allow you to choose from $2^n$ equally probable alternatives.We could label each of the eight possible destinations with a decimal number between 0 and 7, or with the equivalent binary number, as in the figure below. These decimal numbers and their equivalent binary representations are shown in the table. Counting in binary is analogous to counting in decimal. See here to find out how to do it.

The binary representation of numbers has many advantages. For instance, the binary number that labels each destination (e.g.011) explicitly represents the set of left/right instructions required to reach that destination. This representation can be applied to any problem that consists of making a number of two-way (i.e. binary) decisions.

A journey of log2(8) decisions

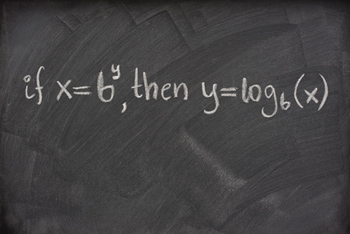

The complexity of any journey can be represented either as the number of possible final destinations or as the number of forks in the road which must be traversed in order to reach a given destination. We know that as the number of forks increases, so the number of possible destinations also increases. As we have already seen, if there are three forks then there are $8 = 2^3$ possible destinations.

The logarithm measures the number of decisions.

Now that we know about logarithms, we can summarise your journey from a different perspective, in terms of bits:

If you have to choose between $m$ equally probable alternatives, then you need $n = \log_2{m}$ bits of information.A million answers to twenty questions

Navigating a series of forks in the road is, in some respects, similar to the game of 20 questions. In this game your opponent chooses a word (usually a noun), and you (the astute questioner) are allowed to ask twenty questions in order to discover the identity of this word. Crucially, each question must have a yes/no (i.e. binary) answer, and therefore the answer provides you with at most one bit of information.

Why at most? By analogy with the navigation example, where each decision at a road fork halved the number of remaining destinations, each question should halve the number of remaining possible words. A question to which you already know the answer is a poor choice of question. For example, if your question is, "Is the word in the dictionary?", then the answer is almost certainly, "Yes!", an answer which is predictable, and which therefore provides you with no information.

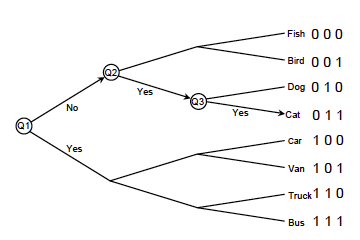

Conversely, a well-chosen question is one to which you have no idea whether the answer will be yes or no — there is a 50:50 chance of it being either. In this case, the answer provides exactly one bit of information. The cut-down version of 20 questions in the figure below shows this more clearly.

In this game your opponent has a vocabulary of exactly eight words, and you know which words they are. Your first question (Q1) could be, "Is it inanimate?", and the answer should halve the number of possible words to four, leading you to your second question (Q2). If your second question (Q2) is, "Is it a mammal?", then the answer should again halve the number of possible words, leading to your third question (Q3). By the time you arrive at Q3, there are just two possible words left, and after you have asked the third question (e.g. "Is it 'cat'?"), your opponent's yes/no response leads you to the correct answer. In summary, you have asked three questions, and excluded all but one out of eight possible words.

Mammal or not?

More realistically, let's assume your opponent has the same vocabulary as you do (most of us have similar vocabularies, so this assumption is not entirely unreasonable). Specifically, let's assume this vocabulary contains exactly 1,048,576 words. Armed with this knowledge, each question can, in principle, be chosen to halve the number of remaining possible words. So, in an ideal world, your first question should halve the number of possible words to 524,288. Your next question should halve this to 262,144 words, and so on. By the time you get to the 19th question there should be just two words left, and after the 20th question, there should be only one word remaining.

The reason this works out so neatly is because twenty questions allow you to choose from exactly $1,048,576 = 2^{20}$ equally probable words (i.e. about one million). Thus, the $20$ bits of information you have acquired with your questioning provide you with the ability to narrow down the range of possible words from about 1 million to just one. In other words, $20$ questions allow you to find the correct word out of about a million possible words. It has been estimated that the average person has a vocabulary of less than 20,000 words, so twenty well-chosen questions should easily suffice to win the game. Adding one more question would not only create a new game, 21 questions, it would also double the number of possible words (to about 2 million) that you could narrow down to one. By extension, each additional question allows you to acquire up to one more bit of information, and can therefore double the initial number of words. In principle, a game of 40 questions allows you to acquire $40$ bits of information, allowing you to find one out of $2^{40} \approx 10^{12}$ words. In terms of the navigation example, $40$ bits would allow you to navigate $40$ forks in the road, and would therefore permit you to choose one out of about a trillion possible routes. So the next time you arrive at your destination after a journey that involved $40$ decisions, remember that you have avoided arriving at a trillion-minus-one incorrect destinations.Information, bits and binary digits

Claude Shannon, the father of information theory.

Despite the fact that the word "bit" is derived from "binary digit", there is a subtle, but vital, difference between them. A binary digit is the value of a binary variable, where this value can be either a 0 or a 1, but a binary digit is not information per se. In contrast, a bit is a definite amount of information. Bits and binary digits are different types of entity, and to confuse one with the other is known as a category error.

To illustrate this point, consider the following two extreme examples. At one extreme, if you already know that you should take the left-hand road from point A, and I show you the binary digit 0 (=left), then you have been given a binary digit but you have gained no information. At the other extreme, if you have no idea about which road to choose and I show you a 0, then you have been given a binary digit and you have also gained one bit of information. Between these extremes, if someone tells you there is a 71% probability that the left-hand road represents the correct decision and I subsequently confirm this by showing you a 0, then this 0 provides you with less than one bit of information (because you already had some information about which road to choose). In fact, it can be shown that when you receive my 0, you gain precisely half a bit of information (you can see a proof in Chapter 5 of Information Theory: A Tutorial Introduction). Thus, even though I cannot give you a half a binary digit, I can use a binary digit to give you half a bit of information.

Sadly, in modern usage, the terms bit and binary digit have become synonymous. David MacKay proposed that the unit of information should instead be called the Shannon, after Claude Shannon, the father of information theory. You can find out more about him and his work here.

About the author

James V. Stone is a Reader in Computational Neuroscience at the University of Sheffield, England. This article is adapted from his recently published book Information Theory: A Tutorial Introduction.

Comments

Anonymous

Good stuff - I shall try and remember the difference between a bit and a binary digit!