What happens inside your computer?

I turn on my laptop. I open the file for this article. I start typing: the letters appearing with each keystroke, building to words, sentences and paragraphs. I save the file. I scroll back to reread. I move the cursor to edit the text: deleting some, moving some, typing more. I save the file, I scroll back to reread, I edit some more, until I feel this article is done, at which point I'll upload it to our website.

That's my view of the events taking place on my computer as I write this article. I'm aware that behind each of these events complex operations are occurring: in the text-editing software, the computer's operating system, the machine code that translates between software and hardware. And underlying it all, this text ultimately exists as strings of binary digits, 0s and 1s, that are manipulated, stored and physically encoded in the computer's circuitry.

Computers are an interface between theory and physical reality; they operate both on a theoretical level of programming languages and data, and on the nuts-and-bolts level of the computer's hardware. They are a pinnacle of discoveries in physics and engineering, but also in the mathematics and logic of computer science. Where does the realm of one end and the other begin?

The notion of an event

"What distinguishes computer science from physics? It's the notion of an event." explains Leslie Lamport, principal researcher at Microsoft Research and winner of the 2013 Turing Award. To understand this difference, consider how a computer scientist and physicist would view the behaviour of a flip-flop (a circuit that is used to store a single bit of data – representing either a zero or a one – and can vary between these two values).

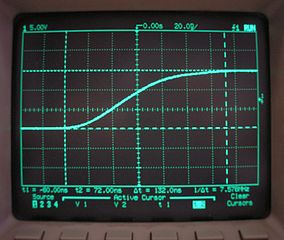

Screen of oscilloscope showing the change in voltage

"If you're a physicist [you'd] put an oscilloscope on that flip-flop," says Lamport. (An oscilloscope is an instrument that measures change in voltage of a circuit over time, plotting the signal on a screen.) "You'll see that it's not exactly zero, it's sort of close to zero, maybe wriggling a little." Then when the flip-flop starts to flip, the line on the oscilloscope will start to rise. "Eventually, in, what to some physicists might seem like a long time, maybe a tenth of a nanosecond or so, it stabilises to approximately one." The physicist views the flipping of the flip-flop as a continuously changing physical process that occurs over a duration of time.

A computer scientist, on the other hand, sees things very differently. "In computer science we abstract that physical process, of changing from zero to one, into a single event. We're not concerned with what is happening during the actual length of time that that event is occurring. If there is no interaction with whatever else is going on during that time we pretend that that event happens as an indecomposable atomic event."
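To make the contrast concrete, here is a minimal Python sketch of the two views. It is only an illustration: the smooth curve, the rise time of roughly a tenth of a nanosecond, the 0.5 threshold and all the function names are assumptions made for this example, not anything measured from a real flip-flop. The physicist's view is the full list of voltage samples; the computer scientist's view is the single event that is left once the trace has been collapsed.

```python
import math

def voltage(t_ns, t_flip=1.0, rise_ns=0.02):
    """Hypothetical smooth 0-to-1 transition centred on t_flip (in nanoseconds).

    With rise_ns=0.02 the curve takes roughly a tenth of a nanosecond
    to climb from near zero to near one (an assumed figure).
    """
    return 1.0 / (1.0 + math.exp(-(t_ns - t_flip) / rise_ns))

def to_bit(v, threshold=0.5):
    """Read a voltage as a bit: the threshold is an assumption of this sketch."""
    return 1 if v >= threshold else 0

def events(samples):
    """Collapse a sampled trace into discrete (time, new_bit) events."""
    found = []
    previous_bit = None
    for t, v in samples:
        bit = to_bit(v)
        if previous_bit is not None and bit != previous_bit:
            found.append((t, bit))  # the moment the stored value changes
        previous_bit = bit
    return found

# The physicist's view: 201 voltage readings over two nanoseconds.
times = [i * 0.01 for i in range(201)]
trace = [(t, voltage(t)) for t in times]

# The computer scientist's view: one atomic event, "the bit became 1".
flip_events = events(trace)
print(len(trace), "samples become", len(flip_events), "event:", flip_events)
```

Everything that happens during the rise is deliberately thrown away. That is safe only under the condition Lamport states: nothing else interacts with the flip-flop while it is changing.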

Computer science is concerned with such atomic, indecomposable events. What constitutes an event may depend on the purpose of the researcher: the atomic event may be the flipping of a flip-flop; it may be the receipt of a message; it may be a computation that takes several microseconds or perhaps even a minute. A digital system is then a collection of these atomic events. The key is that the events are discrete, each isolated from whatever else is happening in the system.

Practical abstraction

This representation is an abstraction from reality, but it serves an important purpose. "We're not creating art," says Lamport. "We're trying to understand the systems we build." And what the researchers are trying to understand decides the level of abstraction they will use.

Consider the example of the receipt of a message. "[On a hardware level this] involves electrical signals propagating along wires, suddenly being received and processed, and all of this electrical stuff that I have no idea of what is going on," says Lamport. At the other extreme, at the level of the operating system, you could view the receipt as a single event: the operating system says, 'Here is this message, it has just arrived'. In between there is the software level, where you could view the receipt of a message in different ways. For example, if the message arrives over the internet, it will have been broken up into packets, and the receipt of the message is actually a collection of events at a still lower level.
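The same receipt can be written down at two of these levels at once. The Python sketch below is a toy model under stated assumptions: the `Packet` and `Receiver` classes, their fields and the three-packet message are all invented for illustration and do not describe how any real network stack works. Each arriving packet is logged as a low-level event, and the complete message is logged as one high-level event.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Packet:
    """Hypothetical packet: its position, the packet count, and a piece of text."""
    seq: int
    total: int
    payload: str

class Receiver:
    """Toy receiver that records events at two levels of abstraction."""

    def __init__(self):
        self.low_level_events = []   # one entry per packet arrival
        self.high_level_events = []  # one entry per complete message
        self._parts = {}

    def on_packet(self, packet):
        # Lower level: every packet arrival is an event in its own right.
        self.low_level_events.append(("packet", packet.seq))
        self._parts[packet.seq] = packet.payload
        # Higher level: once all the pieces are in, report a single event.
        if len(self._parts) == packet.total:
            message = "".join(self._parts[i] for i in range(packet.total))
            self.high_level_events.append(("message", message))
            self._parts = {}

receiver = Receiver()
# Packets may arrive out of order; the high-level view never sees that.
for p in [Packet(1, 3, "lo, "), Packet(0, 3, "Hel"), Packet(2, 3, "world")]:
    receiver.on_packet(p)

print(receiver.low_level_events)
print(receiver.high_level_events)
```

Someone studying packet reordering would work with the first list, while someone studying what the application does with the message needs only the second. Which list counts as "the events" depends on what you are trying to understand.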

An event in computer science is an abstraction of the physical reality, and the level of abstraction depends on what you are trying to understand. But, at whatever level you are working, the key concept is that you can view an event as discrete and indecomposable. This understanding of events underlies one of Lamport's significant contributions to computer science – solving the problem of time in distributed systems – and has unexpected parallels in Einstein's special relativity, as you'll find out in the next article.

About this article

This article is part of our Stuff happens: the physics of events project, run in collaboration with FQXi. The project includes more articles and videos about the role of events, special relativity and logical clocks in distributed computing.

Rachel Thomas is Editor of Plus. She interviewed Leslie Lamport in September 2016 at the Heidelberg Laureate Forum.