Information: Baby steps

This article is part of our Information about information project, run in collaboration with FQXi. Click here to find out about other ways of measuring information.

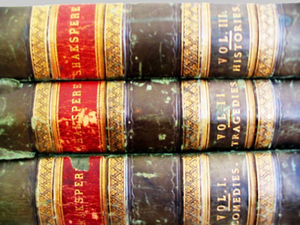

How much information is there in these?

Can you measure information? If you're looking at a telephone book, the number of entries might be a good guide as to how much information it contains. But when it comes to novels, or articles like this one, things get hazy. You could count the number of words, but I can say the same thing using many words, or few. And what if I use another language, or transmit my message in Morse code? Information seems detached from its physical representation, and trying to measure it objectively seems silly.

Yet it's something that people have been trying to do ever since the 1920s. Back then various means of long-distance communication were already around: telegrams, the telephone, the radio. To measure the capacity and efficiency of these transmission channels, people needed an objective measure of information. As a result, they came up with ideas that probe the nature of information and also find practical applications in the devices we use every day.

Excluding possibilities

To have any chance of measuring information, we first need to discard what we hold most dear about it: meaning. That's because meaning is highly subjective: the simple phrase "I love you" can change your life or leave you cold, depending on who it comes from. Let's instead think of a message simply as a string of symbols and see if we can measure its information content in some way that isn't just counting its length.

As an example, suppose I want to transmit the result of a coin flip to you, using a machine that can transmit the letter H for heads and T for tails. Imagine you have just received a T, indicating that I've flipped tails. How informative is this message? Well, it's not that informative really, because there were only two possibilities: an H or a T. You could have guessed the outcome with a 50:50 chance of getting it right.

Ralph Hartley (1888 - 1970).

Image: RW Hartley.

Back in the 1920s the engineer Ralph Hartley took exactly this approach: the more possible messages there are to choose from, the more informative any one of them is. With an alphabet of $s$ symbols you can form $s^n$ different strings of length $n$, and Hartley pondered using the quantity $s^n$ itself as the measure of the amount of information in such a string. In our coin example, the information content of a string of ten Ts and Hs would therefore be $2^{10} = 1,024.$ Using an alphabet of 26 letters and a space, the information content of a string of ten letters would be $27^{10},$ which is a ginormous number.
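To make the counting concrete, here is a minimal Python sketch. The function name `count_strings` is made up for this illustration. It lists every possible string of a given length by brute force and confirms that the count matches $s^n$ for the coin example. For the 27-symbol alphabet it only evaluates the formula, since listing all the strings would take far too long.

```python
from itertools import product

def count_strings(alphabet: str, n: int) -> int:
    """Count all strings of length n over the alphabet by listing them one by one."""
    return sum(1 for _ in product(alphabet, repeat=n))

# Coin example: alphabet {H, T}, strings of length 10.
coin = "HT"
print(count_strings(coin, 10))  # 1024, the same as 2**10

# 26 letters plus a space, strings of length 10: far too many to list,
# so we only evaluate the formula s**n.
letters = "abcdefghijklmnopqrstuvwxyz "
print(len(letters) ** 10)  # 205891132094649
```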

Information rules

Thinking a little further, however, Hartley realised that, as a measure of information, the quantity $s^n$ has a fatal flaw. If we apply it to our coin example, we see that a string of length $n$ has information content $2^n$ while a string of length $n+1$ has information content $2^{n+1}.$ So adding an extra symbol to the string has added $2^{n+1}- 2^n = 2^n$ units of information. This means that adding an extra symbol to a string of length $2$ adds only $2^2=4$ units of information, while adding an extra symbol to a string of length $10$ adds $2^{10} = 1,024$ units. The information added grows exponentially with the length of the string. But that's weird: why should an extra symbol carry much more weight when it is added to a long string than when it is added to a short string?

Instead, Hartley thought, the information content should grow in direct proportion to the length of the string. If every symbol has an information content of, say, $k$, then a string of ten symbols should have an information content of $10 \times k,$ and a string of $n$ symbols should have an information content of $n \times k.$ Writing $I(s, n)$ for the information content of a string of length $n$ made from an alphabet of $s$ symbols, we need \begin{equation} I(s,n) = n \times I(s,1).\end{equation} Since $s^n$ doesn't comply with this condition, it won't do as a measure of information.

Hartley also specified a second rule that a measure of information should satisfy. Suppose I have two different machines to transmit messages, one that uses an alphabet of $s_1$ symbols (say $s_1=2$) and one that uses an alphabet of $s_2$ symbols (say $s_2 = 4$). Suppose also that the first machine transmits strings of length $n_1$ (say $n_1=4$) and the second transmits strings of length $n_2$ (say $n_2 = 2$), and that $$s_1^{n_1} = s_2^{n_2}.$$ This means that the total number of strings that could be formed is the same for both machines ($2^4 = 4^2 = 16$ in the example). Since we have decided that information content hinges on that total number, our measure of information should assign the same value to strings produced on the two different machines. So we need \begin{equation} I(s_1, n_1) = I(s_2, n_2) \mbox{ whenever } s_1^{n_1} = s_2^{n_2}.\end{equation}
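The arithmetic behind the flaw, and behind the two-machine example, can be checked in a few lines of Python. This is only an illustrative sketch; the helper names `num_strings` and `added_by_one_symbol` are invented here and are not Hartley's notation.

```python
def num_strings(s: int, n: int) -> int:
    """Number of distinct strings of length n over an alphabet of s symbols."""
    return s ** n

def added_by_one_symbol(s: int, n: int) -> int:
    """Increase in s**n when a string of length n grows to length n + 1."""
    return num_strings(s, n + 1) - num_strings(s, n)

# Under the s**n measure, one extra coin flip "adds" far more
# to a long string than to a short one.
print(added_by_one_symbol(2, 2))   # 4
print(added_by_one_symbol(2, 10))  # 1024

# Rule (1) asks for I(s, n) = n * I(s, 1); the measure s**n fails it.
print(num_strings(2, 10), 10 * num_strings(2, 1))  # 1024 20

# The two machines from the example can form the same number of strings.
print(num_strings(2, 4), num_strings(4, 2))  # 16 16
```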

How much information is there in coin flips?

It's quite easy to show that there's only one type of mathematical function that satisfies both rules (1) and (2): it's the logarithm (see here for a short proof).

Thus, Hartley argued that the information content of a string of length $n$ made from an alphabet of $s$ symbols should be $$I(s,n) = \log{\left(s^n\right)}.$$ The base of the logarithm doesn't matter here: we could choose any we want. Since $$I(s,1) = \log{s},$$ our choice will determine the information content of a single symbol. By varying the base of the logarithm we can vary the content of a single symbol as we please.

Let's just check that this really works. For any $s$ and $n$ the information in a single symbol is $I(s,1) = \log{s}.$ By rule (1) the information in a string of $n$ symbols should therefore be $n \times \log{s},$ which it is, since $$I(s,n) = \log{\left(s^n\right)} = n \log{s}.$$ And clearly, if $s_1^{n_1} = s_2^{n_2}$ then $$I(s_1, n_1) = \log{\left(s_1^{n_1}\right)} = \log{\left(s_2^{n_2}\right)} = I(s_2,n_2),$$ so rule (2) is also satisfied.

Hartley's information measure is neat because it works for any message, no matter how long and no matter which language or code it is written in. There is, however, a major flaw: Hartley's measure gives the same value to every symbol. You could well imagine situations, though, in which one symbol carries a lot more significance than another. It took another twenty years until the mathematician Claude Shannon took the first steps towards solving this problem. His work was so fundamental that he became known as the father of information theory. Find out more in the next article.
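Hartley's two rules can also be checked numerically. The Python sketch below is an illustration rather than anything from Hartley's own work: the function name `hartley_information` and the default choice of base 2 are assumptions made here. With base 2 a single coin flip has information content 1, a unit conventionally called a bit.

```python
import math

def hartley_information(s: int, n: int, base: float = 2.0) -> float:
    """Hartley's measure I(s, n) = log(s**n), computed as n * log(s)."""
    return n * math.log(s, base)

# Rule (1): a string of ten coin flips carries ten times
# the information of a single flip.
one_flip = hartley_information(2, 1)
ten_flips = hartley_information(2, 10)
print(math.isclose(ten_flips, 10 * one_flip))  # True

# Rule (2): the two machines from the example (2**4 == 4**2)
# are assigned the same information content.
print(math.isclose(hartley_information(2, 4), hartley_information(4, 2)))  # True

# Changing the base only rescales the content of a single symbol:
# in base 10, one symbol from a ten-symbol alphabet has content 1.
print(math.isclose(hartley_information(10, 1, base=10), 1.0))  # True
```

Floating-point logarithms are not exact, which is why the comparisons use `math.isclose` rather than `==`.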

About this article

Marianne Freiberger is co-editor of Plus. She would like to thank Scott Aaronson, a computer scientist at the Massachusetts Institute of Technology, for a very useful conversation about information. And she recommends the book Information Theory: A Tutorial Introduction by James V. Stone as a mathematical introduction to the subject.