New artificial intelligence tool for diagnosing Alzheimer's

Alzheimer's is an irreversible disease that starts slowly but progressively worsens. Diagnosing patients early gives the best chance of slowing the progression down and improving the patient's quality of life. Unfortunately, though, there is no simple test for diagnosing Alzheimer's. To find out whether a patient has the disease, doctors perform a range of different tests and try to rule out other conditions with similar symptoms. This involves a lot of work on the part of clinicians. To reduce their workload, a multidisciplinary team at the University of Cambridge have developed an automated method that can help with the diagnosis.

The technique developed by the team, which is led by mathematicians Angelica Aviles-Rivero and Carola-Bibiane Schönlieb, aims to diagnose the disease early on before any significant symptoms have appeared. Their machine learning framework makes use of the wide range of data that can be available for a patient, providing new techniques to analyse this rich data set and requiring less human input into the process. And testing this method against real patient data shows it outperforms current machine learning techniques for early diagnosis of Alzheimer's disease.

"We want to detect the disease as early as possible, to provide clinicians with a quick decision to provide treatment," says Aviles-Rivero. She is working with colleagues Christina Runkel and Carola-Bibiane Scönlieb from the Cambridge Mathematics of Information in Healthcare Hub (CMIH), together with Zoe Kourtzi, Professor of Experimental Psychology from the University of Cambridge, and Nicolas Papadakis from the Institut de Mathématiques de Bordeaux.

Making use of multiple sources of data

Many different types of patient information are relevant when diagnosing Alzheimer's disease. There are medical images, such as MRI and PET scans of a patient's brain (you can read more about these medical imaging techniques in the article Uncovering the mathematics of information). Other key information also comes from non-imaging data, such as genetic data, and personal information such as the patient's age.

One approach often used in machine learning is to analyse the relevant data to see whether it contains hidden structures that might help with the task at hand — in this case diagnosing a disease. This approach normally focuses on just one type of data, but Avilies-Rivero and her colleagues wanted to make use of all the different types of information that are available about individual patients. . "How can we merge this rich heterogenous data – these different modalities of data – and how can we extract a meaningful [understanding] of this data?"

The first step is to analyse each source of data separately. There are established techniques from image analysis that automatically identify key features in large sets of images. This could be the presence of certain patterns or geometric structures in the image, which, for these MRI images, might indicate the condition of certain regions of the brain in patients with Alzheimer's. These features define what is called the feature space – a high-dimensional mathematical space where each axis of the space corresponds to a particular image feature. The images are represented as points in this feature space so that images that share a particular feature are positioned close to each other in the direction of that axis in the feature space. (You can see an example of the use of this type of image analysis technique in the article Face to face.)

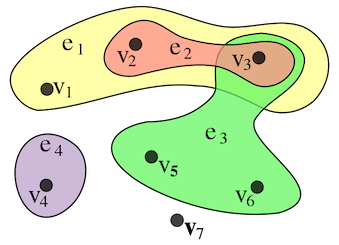

The researchers can use this type of representation of the MRI images to capture the relationships between different features in these images by building a hypergraph. An ordinary graph is just a network consisting of points, or nodes, that are connected by edges. A hypergraph has the advantage of capturing more complex connections between the nodes. Each edge in a graph connects exactly two nodes, but the hyperedges of a hypergraph can connect any number of nodes. The researchers connect the points that lie close together in the feature space with a hyperedge, uncovering any structure in the MRI data. And in the same way they can build a seperate hypergraph for the structure of the PET image data.

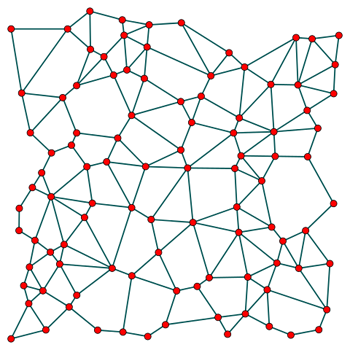

A complicated looking graph, where each edge relates exactly two nodes – represented by a line joining those two nodes.

Example of a hypergraph where the hyperedges can relate any number of nodes. Now the hyperedges (e1, e2, e3 and e4) are represented by coloured groupings, and can connect any number of nodes. (Image by Kilom691 – CC BY-SA 3.0)

When it comes to non-image data, such as genetic information, image analysis techniques obviously don't work, but Aviles Rivero and her colleagues were able to use appropriate mathematical measures of similarity to build hypergraphs for each of these different types of data too. Finally, all the hypergraphs for the different modalities of data – the imaging and non-imaging data – are joined together by linking all the different samples for each patient in a hyperedge.

The resulting multimodal hypergraph provides a way of capturing the incredibly rich connections between all the different types of data. "The hyperedges allow us to make higher-order connections in the data, to go beyond pairwise data relations," says Aviles Rivero.

Robust enough for the real world

"We want to develop automatic tools that are robust enough for diagnosing patients in a real clinical setting," says Aviles-Rivero. But in order to do this, these tools need to be ready to handle real life data.

For example, if the same person had two medical images taken, say two MRIs, these images won't necessarily be identical even though they should hold the same information about that patient's brain. Two MRIs could be slightly different if they were taken by different machines, or they might have slightly different orientations, or there might be small distortions in an image due to how it was handled or digitally saved.

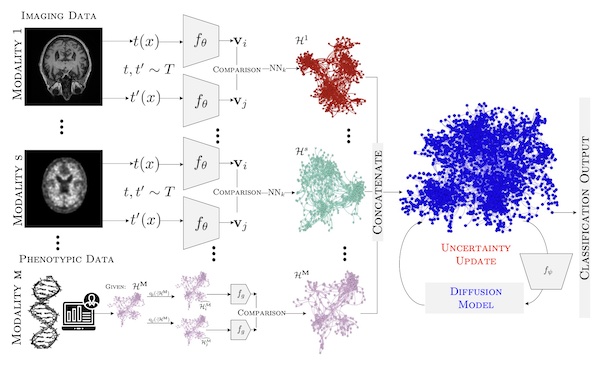

Brilliantly, the way the researchers build their mathematical representations of the patient data is inherently robust in the face of these real life data challenges. The framework automatically builds a representation of the image data as points in the corresponding feature space as described above. Then it repeats this process with a tweaked data set, where there have been small changes to the images. These resulting representations are compared using a machine learning method called contrastive self-supervised learning. This indicates how to finesse the process mapping the images to the feature space to ensure the final representation remains as similar as possible even if there are such small changes in the images. This means that they can be sure the hypergraph they build from this representation will accurately capture the meaning within the image data, regardless of any small differences in real life data.

A visual overview of the machine learning framework. (Image from the paper Multi-Modal Hypergraph Diffusion Network with Dual Prior for Alzheimer Classification, to be presented at MICCAI 2022 – used with permission.)

A slight change to the pixel data or the orientation of an image doesn't greatly change the meaning within the image as a whole. But slight changes to genetic data can greatly change the significance of that data, so a different adaptation to the process is needed to ensure the representation of the non-imaging data is also robust. Instead Aviles-Rivero and her colleagues test the result of making small changes to the respective hypergraphs themselves, and that these altered representations still retain the overall structure and meaning of the data.

"Our framework provides major advantages, one of which is the construction of a robust hypergraph." says Aviles-Rivero. "These technical advantages provide better performance for Alzheimer's disease diagnosis."

Starting with a smaller label set

So far the algorithm has worked, unsupervised, to extract meaning from the various data sets available. But the key task of diagnosing a patient does need some human input.

In machine learning algorithms, instead of explicitly teaching a computer how to do a task (in the sense of a traditional computer program) the computer learns by the experience of attempting to do the task itself many many times. (You can read an introduction to how this works in Maths in a minute: Machine learning and neural networks.)

One option – called supervised learning – would be to start with a set of data for patients that have already been assessed by a doctor for Alzheimer's disease. The algorithm can attempt to diagnose the patients based on this training data, and then compare its results against the correct diagnosis given by the doctor. (You can think of it as a bit like the computer being at school, checking its answers against those given by a teacher.) In this way the algorithm learns patterns in the data that indicate a patient has the disease.

Supervised learning does get good results but it takes large sets of training data labelled with the desired output. "Labelling training data is always time consuming, but is even trickier in the medical domain because of the clinical expertise that is required," says Aviles-Rivero. "[The problem] is how to develop [machine learning] tools that rely less on labelling."

Instead Aviles-Rivero and her colleagues have developed ingenious techniques for what is known as semi-supervised learning. In semi-supervised learning only a very small set of human-labelled training data is provided, relative to the larger set of unlabelled training data. (You can read more details in Maths in a minute: Semi-supervised machine learning.)

The algorithm then extracts as much structure as it can from the labelled and unlabelled data, in this case producing the robust multi-modal hypergraph for the patient data. Then the algorithm uses this structure to propagate the labels across the whole data set.

"We start with a tiny label set, just 15% of the whole data set is labelled," says Aviles-Rivero. "Then we take advantage of the connections in the data and propagate these labels to the whole hypergraph." You can imagine this as colouring each piece of the labelled data with a colour representing their particular diagnosis (say, green for "healthy", blue for "mild cognitive impairment" and red for "Alzheimer's disease"), and these colours then diffusing through the connections in the hypergraph to the unlabelled data, like a drop of coloured dye spreading through a piece of paper. The researchers use a new mathematical model of diffusion that is driven by a particular characteristic of the hypergraph. "This problem is not new but we propose a different way to solve the hypergraph diffusion."

Their semi-supervised strategy then loops through the two steps we have described – building the hypergraph from the data, then diffusing the labels through the hypergraph – with each step refining the next. "This is a hybrid model. It takes advantage of the mathematical modelling and the [machine] learning techniques," says Aviles-Rivero. "It takes the best of both worlds."

This new approach is more successful at diagnosing Alzheimer's disease than other machine learning strategies. Aviles-Rivero and her colleagues tested it on real world data taken from the Alzheimer's Disease Neuroimaging Initiative (ADNI) data set. They found that including all the different types of data, and capturing higher-order connections within this multi-modal data, meant their strategy performed better than any other.

"Our ultimate goal is to develop realistic robust techniques for clinical use. We work closely with a multidisciplinary team to achieve this," says Aviles-Rivero. "Our diagnosis tool is a first step towards an all-in-one framework for Alzheimer's disease analysis. Our next step is to further test our new technique with a larger cohort, and extend our framework for prognosis."

Alzheimer's is a devastating disease and going through the process of diagnosis is distressing for patients and their loved ones. The aim of the work of Aviles-Rivero and her colleagues is not to replace the clinicians who work with these patients. Instead the machine learning framework have developed aims to support clinicians, freeing up valuable time for those tasks only humans can perform.

About this article

Angelica Aviles-Rivero is a Senior Research Associate at the University of Cambridge, where she is a member of the Cambridge Mathematics of Information in Healthcare Hub and the Cantab Capital Institute for the Mathematics of Information. She will be presenting the paper Multi-Modal Hypergraph Diffusion Network with Dual Prior for Alzheimer Classification at MICCAI 2022.

Rachel Thomas is Editor of Plus. She interviewed Aviles-Rivero in August 2022.