Easy as pi?

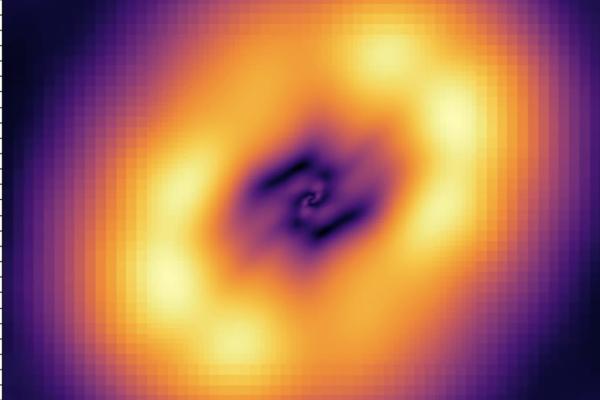

This article explores this most beautiful of numbers. Find out its definition, why its value can only ever be approximated, what it has to do with waves, and why it contains the history of the Universe.

One of the most important mathematicians of her time, Noether also proved a fundamental result in physics.

To avoid full school closures in the next pandemic, or even epidemic, epidemiologists need crucial information from schools, students, and parents.

There's a romantic vision of mathematicians only needing pen and paper for their work. Here's why this is far from the truth when it comes to mathematical modelling, used to solve problems in the real world.

Combining AI with human knowledge of physics may lead to powerful applications in a range of areas — from weather forecasting to engineering.

We explore the maths behind the well-known small-world phenomenon: any two people in the world are likely to be connected through a surprisingly short chain of acquaintances.

When a new infectious disease enters a population, everything depends on who catches it: superspreaders, or people with few contacts who don't pass it on. We investigate the stochastic nature of the early stages of an outbreak.

Hannah Fry will join us at the University of Cambridge in January as Cambridge's first Professor for the Public Understanding of Mathematics!

Physicists have figured out how we might detect hypothetical boson stars. If we do, this would be a major step towards solving the riddle of dark matter.

Frederick Manners has won a prestigious EMS Prize at the European Congress of Mathematics 2024 for, among other things, a problem involving pyjamas.

Tom Hutchcroft has won a prestigious EMS Prize at the European Congress of Mathematics 2024 for work on mathematical models that can help us understand phase transitions.

Richard Montgomery has won a prestigious EMS Prize at the European Congress of Mathematics 2024 for work on objects so ubiquitous in everyday life it's easy to forget they're mathematical: networks.